To interpret the proofs found in Pamalogy, a knowledge of symbolic logic is helpful, so here is a guide to some basic concepts for newcomers to the field of logic.

Terminology

The words used in logic have precise meanings. We’ll start with the most basic concepts and present them in an order that builds on itself rather than alphabetically.

Informal Logic – argumentation and the rules for making sound and valid arguments, as in formal debates or in everyday cooperative discourse.

Cooperative Discourse – productive dialogue that results in better understanding.

Proposition – the claim expressed by a statement; something that is either true or false. Propositions are the building blocks of arguments.

Argument – a number of propositions that make a case for an assertion.

Formal Logic – argumentation that is reduced to its form for the sake of analysis, expressed with symbols, as with math.

Statements – sentences that make claims about reality. Assertions. Not all sentences are statements. Questions, commands and exclamations are not statements unless they make assertions about reality. Declarative sentences are sentences that make assertions.

Premises – statements that are used to support a conclusion.

Conclusion – An assertion made with the support of a number of premises.

Standard Form – a way of presenting an argument by numbering premises and making the conclusion clear. Parenthetical remarks to the side often accompany the statements line by line, as well, especially where there are intermediate inferences.

Inference – the step of drawing a conclusion from premises, or the conclusion so drawn.

Intermediate Inference – a conclusion drawn partway through an argument, from some of its premises, on which the broader argument depends. In formal logic, it is typically accompanied by parenthetical remarks indicating which premises it depends on.

Enthymeme – a valid or invalid argument that omits a premise, making it necessary to fill in the blanks by working backward from the conclusion.

Fallacies – common errors in logic that bring about invalid and/or unsound arguments.

Valid Arguments – arguments in which, if all of the premises are true, the conclusion must be true.

Sound Arguments – valid arguments whose premises are all true.
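The definition of validity can be tested mechanically for simple propositional arguments: try every assignment of true and false to the atomic sentences and look for a row where all the premises are true and the conclusion is false. The sketch below assumes premises and conclusion are written as Python functions of a dictionary of truth values; `is_valid` is an illustrative name, not part of any library. Soundness cannot be checked this way, because it depends on whether the premises are actually true.

```python
from itertools import product

def is_valid(atoms, premises, conclusion):
    """Return (True, None) if the argument is valid, else (False, counterexample).

    atoms      -- list of atomic sentence letters, e.g. ["A", "B"]
    premises   -- list of functions mapping an assignment dict to True/False
    conclusion -- function mapping an assignment dict to True/False
    """
    for values in product([True, False], repeat=len(atoms)):
        row = dict(zip(atoms, values))
        # One row with true premises and a false conclusion refutes validity.
        if all(p(row) for p in premises) and not conclusion(row):
            return False, row
    return True, None

# Disjunctive syllogism: A or B; not A; therefore B.
ds = is_valid(["A", "B"],
              [lambda v: v["A"] or v["B"], lambda v: not v["A"]],
              lambda v: v["B"])

# Affirming a disjunct: A or B; A; therefore not B.
ad = is_valid(["A", "B"],
              [lambda v: v["A"] or v["B"], lambda v: v["A"]],
              lambda v: not v["B"])

print(ds)  # (True, None)
print(ad)  # (False, {'A': True, 'B': True})
```

The counterexample for affirming a disjunct is the row where both disjuncts are true, which the inclusive "or" allows.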

Symbolize – To express statements using symbols, beginning with an alphabetical list of their definitions.

Atomic Sentences – sentences that eliminate connecting words, such as “and”, “or”, “if”, “then”, and “not”, in order to strip ideas down into simple positive statements. The atomic sentence can then easily be symbolized with a capital letter from the alphabet for analysis in formal logic.

Extracting an Argument – finding the conclusion and premises of an informal argument and putting them in a standard symbolized form to determine its validity and which premises need to be defended.

Truth Table – a tool that lists every possible combination of truth values for the variables in a statement and shows whether the statement is true or false under each one.
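As a rough illustration, a truth table can be generated by looping over every combination of T and F for the variables; with n variables there are 2^n rows. This is a minimal Python sketch; the helper names `truth_table` and `show` are hypothetical, and formulas are assumed to be written as Python functions.

```python
from itertools import product

def truth_table(atoms, formula):
    """Return every row of a truth table as (assignment, value) pairs.

    atoms   -- list of variable letters, e.g. ["P", "Q"]
    formula -- function from an assignment dict to True/False
    """
    rows = []
    for values in product([True, False], repeat=len(atoms)):
        assignment = dict(zip(atoms, values))
        rows.append((assignment, formula(assignment)))
    return rows

def show(atoms, formula, label):
    """Print the table using T and F, one row per combination."""
    print(" ".join(atoms), "|", label)
    for assignment, value in truth_table(atoms, formula):
        cells = ["T" if assignment[a] else "F" for a in atoms]
        print(" ".join(cells), "|", "T" if value else "F")

# "P and not Q" is true on exactly one of the four rows.
show(["P", "Q"], lambda v: v["P"] and not v["Q"], "P ^ ~Q")
```

This prints the four rows `T T | F`, `T F | T`, `F T | F`, `F F | F` beneath the header.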

Entailment – a logical relationship in which the truth of one statement (or set of statements) guarantees the truth of another, so that if the first is true, the second must also be true.

Rules of Inference – a standard set of rules that can be symbolized alongside premises and conclusions to show why statements in an argument are valid.

Logical Operators – connecting words such as “not”, “some”, “and”, “or”, “all”, and “if/then.” We will cover many of these in the nomenclature section below.

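Functional Equivalency – when two statements have the same truth value under every possible assignment of truth values, so that one can be substituted for the other in an argument.

DeMorgan’s Rule – a functional equivalency for negated conjunctions and disjunctions: ~(P ^ Q) is equivalent to ~P ∨ ~Q, and ~(P ∨ Q) is equivalent to ~P ^ ~Q.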
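Functional equivalence can be checked by confirming that two formulas agree on every row of their truth tables. The Python sketch below does this for both of DeMorgan’s equivalences and for one common mistake; the helper `equivalent` is a hypothetical name used only for illustration.

```python
from itertools import product

def equivalent(f, g, n):
    """True if f and g agree on every assignment of n truth values."""
    return all(f(*vals) == g(*vals) for vals in product([True, False], repeat=n))

# ~(P ^ Q) is equivalent to ~P v ~Q   ("not both")
not_both = equivalent(lambda p, q: not (p and q),
                      lambda p, q: (not p) or (not q), 2)

# ~(P v Q) is equivalent to ~P ^ ~Q   ("neither ... nor")
neither = equivalent(lambda p, q: not (p or q),
                     lambda p, q: (not p) and (not q), 2)

# A common mistake: ~(P ^ Q) is NOT equivalent to ~P ^ ~Q
mistake = equivalent(lambda p, q: not (p and q),
                     lambda p, q: (not p) and (not q), 2)

print(not_both, neither, mistake)  # True True False
```

The mistaken version fails on the row where P is true and Q is false: "not both" is true there, but "both not" is false.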

Propositional Logic – the logic of arguments built from whole statements joined by connectives such as “if/then,” “and,” “or,” and “not.”

Disjunctive Syllogism – A process of elimination. If this or that is true and this is false, then that must be true, or vice versa.

Categorical Logic – arguments about whether there are some, all or no members in various categories.

Categorical Syllogism – A very specific categorical argument representing three categories in a pair of premises. Two of the categories will appear in the conclusion.

Existentials – the existence, or supposition, that there is at least one item in a category. An existential claim is true if at least one item in a set meets the criteria of the claim. A fancy word for “some.”

Universals – claims that can be made about entire categories, such as “all” or “none.”

Disjunction – “or.”

Conjunction – “and.”

Subject – the part of a sentence something is about.

Predicate – the part of a sentence that says something about the subject.

Set – all items in a group of items, aka category.

Series – the list of items in a set, also known as its members, sometimes written as a lower-case letter with a number (s1, s2, s3 …) when they are all of the same kind.

Instance – an item in a category.

Contradiction – two assertions or categories that cannot both be true; the conjunction of a proposition with its negation. The result is a logical impossibility.

Subaltern – a proposition that is implied by another proposition, as when the universal “All S are P” implies the particular “Some S are P” (assuming there are some S).

Applied Universal Instantiation – if all of a Subject (S) are a Predicate (P), then any instance (s) of that Subject is also an instance of that Predicate.

Existential Generalization – if there is an instance (s) of a Subject (S), then there are some S; so if s is S and s is P, then some S are P.

Conversion – switching of order from subject to predicate or vice versa. Doing so is valid for Universal Negatives and Particular Affirmatives but invalid for Universal Affirmatives and Particular Negatives.

Obversion – switching from affirmative to negative or from negative to affirmative, while also either adding or subtracting a negation from the predicate.

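Contraposition – switching the order of subject and predicate in a statement while replacing each with its complement (adding or removing “non-” on both terms). The quality of the statement (affirmative or negative) stays the same. Doing so is valid for Universal Affirmatives and Particular Negatives but invalid for Universal Negatives and Particular Affirmatives.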
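Conversion, obversion, and contraposition can be checked with a Venn diagram model: a categorical statement about S and P depends only on which of the four regions of a two-circle diagram are occupied, so trying all 16 patterns of empty and non-empty regions covers every case. This is a minimal sketch under that assumption, using the modern reading in which categories may be empty; the function names are hypothetical. It tests two-way equivalence, and each form marked valid below is in fact an equivalence.

```python
from itertools import product

# A region of a two-circle Venn diagram: (inside S?, inside P?).
REGIONS = list(product([True, False], repeat=2))

def S(region):
    return region[0]

def P(region):
    return region[1]

def non(term):
    """Complement of a term: non(P) is true of exactly the regions outside P."""
    return lambda region: not term(region)

# The four categorical forms, each evaluated on a list of occupied regions.
def all_are(x, y):        # A: All x are y
    return lambda occ: all(y(r) for r in occ if x(r))

def no_are(x, y):         # E: No x are y
    return lambda occ: not any(y(r) for r in occ if x(r))

def some_are(x, y):       # I: Some x are y
    return lambda occ: any(y(r) for r in occ if x(r))

def some_are_not(x, y):   # O: Some x are not y
    return lambda occ: any(not y(r) for r in occ if x(r))

def equivalent(f, g):
    """True if f and g agree for every pattern of empty / non-empty regions."""
    for bits in product([True, False], repeat=len(REGIONS)):
        occ = [r for r, b in zip(REGIONS, bits) if b]
        if f(occ) != g(occ):
            return False
    return True

results = {
    "conversion E": equivalent(no_are(S, P), no_are(P, S)),                 # valid
    "conversion I": equivalent(some_are(S, P), some_are(P, S)),             # valid
    "conversion A": equivalent(all_are(S, P), all_are(P, S)),               # invalid
    "obversion A": equivalent(all_are(S, P), no_are(S, non(P))),            # valid
    "contraposition A": equivalent(all_are(S, P), all_are(non(P), non(S))), # valid
    "contraposition O": equivalent(some_are_not(S, P),
                                   some_are_not(non(P), non(S))),           # valid
    "contraposition E": equivalent(no_are(S, P), no_are(non(P), non(S))),   # invalid
}
for name, ok in results.items():
    print(f"{name:18} {'valid' if ok else 'invalid'}")
```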

Law of Identity – everything is identical to itself (=I).

Variables – symbols representing anonymous particulars: not fixed constants, but arbitrary.

Existential Instantiation – the principle whereby when we know a particular affirmative or negative claim is true, then we can substitute a variable name for the particular(s) in those categories. (Just be careful not to use the same variable to mean two different things).

Categorical Syllogism – an argument with three categorical propositions (two premises and a conclusion) that has three categorical terms, each of which is used twice.

Minor Term – the term that serves as the subject of a categorical syllogism’s conclusion.

Major Term – the term that serves as the predicate of a categorical syllogism’s conclusion.

Middle Term – the third categorical term, which appears in both premises (once alongside the minor term and once alongside the major term) but does not appear in the conclusion.

256 Categorical Syllogisms – each of the three statements (two premises and one conclusion) can take one of four standard forms, labeled by the vowels A, E, I, and O, so there are 4 × 4 × 4 = 64 possible moods. The middle term can also be placed in one of four positions relative to the major and minor terms (the four figures), so there are 64 × 4 = 256 possible categorical syllogisms.

Fifteen Valid Categorical Syllogisms – of the 256 possible combinations, only fifteen are valid (on the modern interpretation, which does not assume that categories have members), so logicians typically memorize them as a shortcut to determining the validity of syllogisms in standard form. Traditionally, the vowels in a family of Latin names, separated into four groups according to figure, were used as a mnemonic device: (1) Barbara, Celarent, Darii, Ferio; (2) Cesare, Festino, Camestres, Baroco; (3) Datisi, Ferison, Bocardo, Disamis; (4) Fresison, Calemes, Dimaris.
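The counts of 256 and fifteen can be reproduced by brute force. A categorical statement about S, M, and P depends only on which of the eight regions of a three-circle Venn diagram are occupied, so a syllogism is valid if no pattern of empty and non-empty regions makes both premises true and the conclusion false. This Python sketch assumes the modern (Boolean) reading, in which categories may be empty; under the traditional reading with existential import, a few more forms such as AAI-1 would also count as valid. The names `holds` and `is_valid` are hypothetical.

```python
from itertools import product

# A region of a three-circle Venn diagram: (in S?, in M?, in P?).
REGIONS = list(product([False, True], repeat=3))
INDEX = {"S": 0, "M": 1, "P": 2}

def holds(form, x, y, occupied):
    """Truth of the categorical form A, E, I or O with subject x and predicate y,
    given the list of non-empty Venn regions."""
    i, j = INDEX[x], INDEX[y]
    if form == "A":  # All x are y: no occupied region is x but not y
        return not any(r[i] and not r[j] for r in occupied)
    if form == "E":  # No x are y: no occupied region is both x and y
        return not any(r[i] and r[j] for r in occupied)
    if form == "I":  # Some x are y
        return any(r[i] and r[j] for r in occupied)
    if form == "O":  # Some x are not y
        return any(r[i] and not r[j] for r in occupied)
    raise ValueError(f"unknown form {form!r}")

# (subject, predicate) of the major premise and of the minor premise in each figure.
FIGURES = {
    1: (("M", "P"), ("S", "M")),
    2: (("P", "M"), ("S", "M")),
    3: (("M", "P"), ("M", "S")),
    4: (("P", "M"), ("M", "S")),
}

def is_valid(mood, figure):
    """Valid if no pattern of empty/non-empty regions makes both premises
    true and the conclusion 'S ... P' false."""
    major, minor = FIGURES[figure]
    for bits in product([False, True], repeat=len(REGIONS)):  # 2**8 patterns
        occupied = [r for r, b in zip(REGIONS, bits) if b]
        premises_true = (holds(mood[0], *major, occupied)
                         and holds(mood[1], *minor, occupied))
        if premises_true and not holds(mood[2], "S", "P", occupied):
            return False  # counterexample found
    return True

all_forms = [("".join(m), f) for m in product("AEIO", repeat=3) for f in FIGURES]
valid = [f"{m}-{f}" for m, f in all_forms if is_valid(m, f)]
print(len(all_forms), len(valid))  # 256 15
print(valid)
```

The fifteen forms it reports correspond to the fifteen names in the mnemonic, for example EAE-1 for Celarent and AOO-2 for Baroco.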

Copi & Cohen’s Six Rules – perhaps an easier way to recognize valid categorical syllogisms in standard form is to remember the six rules presented in Introduction to Logic, first published by the American logician Irving Copi (1917-2002) in 1953 and later co-authored with Carl Cohen.

Conditionals – “if”/”then” statements.

Antecedents – the “if” part of a conditional.

Consequent – the “then” portion of a conditional.

Material Conditionals – everyday conditionals pertaining to the apparently real world.

Counterfactual Conditionals – “if”/“then” propositions that speculate using “what if” or other imaginary conditions for the sake of logical argument. They are true if, supposing the antecedent were true and everything else stayed the same, the consequent would also be true.

Logical Possibility – any proposition, real or imaginary, that is not a contradiction.

Logically Necessary – a proposition that would be contradictory, and therefore impossible, if it were false.

Logically Contingent – anything that is logically possible but not logically necessary.

Possible World – in counterfactual conditional logic, this has the specific meaning of a logical possibility.

Nearest Possible World – everything else being equal, what if this one difference were true?

Counterexamples – cases in which an argument’s premises are true but its conclusion is false, proving that the argument is not valid in every case.

Strict Conditionals – conditionals that utilize logical necessities to produce statements to the effect “if something were true, then something consequent would necessarily be true.” The antecedent logically entails the consequent. For a strict conditional to be true, every possible world in which the antecedent is true must be a world in which the consequent is also true. If there is any possible world in which the antecedent is true but the consequent is false, then the strict conditional is false. There can be no valid counterexamples.

Definitional Claims – propositions for possible worlds in which a defined term would exist based on the inferences related to it.

Necessary Conditions – everything which would instantiate a defined term must fall into the category which is a necessary condition. (all instances qualify)

Sufficient Conditions – anything which falls into the category which is a sufficient condition must be an instance of the thing being defined. (all qualifiers are instances)

Biconditionals – a statement that combines two conditionals in one (“P if and only if Q”): P → Q and Q → P, so that each side serves as both the antecedent and the consequent of the other.

Material Biconditionals – biconditionals about the real world, whatever that is, in which both the conditional and its converse are true.

Strict Biconditionals – biconditionals that hold in every possible world: there is no possible world in which one side is true and the other is false.
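A material biconditional is true exactly when both sides have the same truth value, which is the same as the conjunction of the conditional and its converse. This small Python sketch checks that on all four rows; the helper names are hypothetical, and it covers only the material case (a strict biconditional would require checking every possible world, which a truth table alone does not model).

```python
from itertools import product

def implies(a, b):
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b

def iff(a, b):
    """Biconditional: true exactly when a and b have the same truth value."""
    return a == b

for p, q in product([True, False], repeat=2):
    both_ways = implies(p, q) and implies(q, p)   # (P -> Q) ^ (Q -> P)
    print(p, q, iff(p, q), both_ways)
```

The last two columns match on every row: True, False, False, True.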

Truth-Functional Truth – a necessary truth that comes out true in every possible scenario, that is, on every row of its truth table (also called a tautology).
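One way to see the difference between truth-functional truths, contradictions, and contingent statements is to evaluate a formula on every row of its truth table. In this minimal Python sketch, `classify` is a hypothetical helper and formulas are assumed to be written as Python functions of their atoms.

```python
from itertools import product

def classify(formula, n):
    """Classify a formula of n atoms as a tautology, a contradiction, or contingent."""
    values = [formula(*vals) for vals in product([True, False], repeat=n)]
    if all(values):
        return "tautology"
    if not any(values):
        return "contradiction"
    return "contingent"

print(classify(lambda p: p or not p, 1))         # law of the excluded middle
print(classify(lambda p: not (p and not p), 1))  # law of non-contradiction
print(classify(lambda p: p and not p, 1))        # a contradiction
print(classify(lambda p, q: p or q, 2))          # true on some rows only
```

This prints `tautology`, `tautology`, `contradiction`, and `contingent`, in that order.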

Modus Ponens – given a conditional and its antecedent, infer the consequent: from “if P then Q” and P, conclude Q. Because the conditional is eliminated in the process, it is also called conditional elimination (→E).

Affirming the Consequent – not to be confused with affirming a disjunct, to affirm the consequent is to infer, from a conditional and its consequent, that the antecedent must be true: from “if P then Q” and Q, conclude P. This is a fallacy.

Material Contraposition – the valid inference that swaps the antecedent and the consequent while negating both (from P → Q to ~Q → ~P), or that removes a negation from both, or double-negates both, during the swap.

Commutation of Conditionals – a fallacy that swaps the antecedent and the consequent without negating both, as material contraposition requires (for example, inferring Q → P from P → Q). The result is an invalid inference.

Modus Tollens – given a conditional, if the negation of its consequent is true, then the negation of its antecedent must be true: from “if P then Q” and ~Q, conclude ~P. This counterintuitive argument can be proven valid by combining modus ponens with material contraposition.

Denying the Antecedent – the fallacy of inferring ~Q from “if P then Q” and ~P. It is often committed by misapplying modus tollens, which runs from the negated consequent to the negated antecedent, not the other way around. The mistake is also made when hastily treating a conditional as a biconditional.
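The two valid forms and the two fallacies above can be told apart by checking every combination of truth values for P and Q: a form is valid only if no row makes all its premises true and its conclusion false. This is a minimal Python sketch; the helper names `implies`, `valid`, and `if_p_then_q` are hypothetical.

```python
from itertools import product

def implies(a, b):
    """Material conditional: false only when a is true and b is false."""
    return (not a) or b

def valid(premises, conclusion):
    """Check an argument over two atoms P and Q by trying all four assignments."""
    return all(conclusion(p, q)
               for p, q in product([True, False], repeat=2)
               if all(premise(p, q) for premise in premises))

def if_p_then_q(p, q):  # premise 1 in every form below: P -> Q
    return implies(p, q)

forms = {
    "modus ponens":             ([if_p_then_q, lambda p, q: p],     lambda p, q: q),
    "modus tollens":            ([if_p_then_q, lambda p, q: not q], lambda p, q: not p),
    "affirming the consequent": ([if_p_then_q, lambda p, q: q],     lambda p, q: p),
    "denying the antecedent":   ([if_p_then_q, lambda p, q: not p], lambda p, q: not q),
}
results = {name: valid(prems, concl) for name, (prems, concl) in forms.items()}
for name, ok in results.items():
    print(f"{name:25} {'valid' if ok else 'invalid'}")
```

Both fallacies fail on the same row, where P is false and Q is true: the conditional holds, yet the fallacious conclusion is false.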

Implication – “not this, or that” (~P ∨ Q) is equivalent to saying “if this, then that” (P → Q).

Conditional Proof – Also known as “conditional assumption” because it begins with the statement “if this were true” or “if that were true” …

Provisional Assumption – The “what if” part of a conditional proof; the part that is assumed for the sake of a thought experiment.

Hypothetical Syllogism – A chain of conditionals in which each consequent is the antecedent of the next, shortened to a single conditional from the first antecedent to the last consequent (from P → Q and Q → R, infer P → R).

Indirect Proof – A proof that assumes the negation of the statement being sought and shows that this assumption leads to a contradiction.

Disjunction Elimination – Reasoning from a disjunction by cases: if P ∨ Q is true, and R follows from P and also follows from Q, then R is true.

Reductio ad Absurdum – proof by contradiction: showing that an assumption leads to a contradiction (an absurdity), so the assumption must be false.

Proof by Contradiction – an indirect proof, or disproof, accomplished by demonstrating a contradiction.

Negation Introduction – When an affirmative provisional assumption is introduced and leads to a contradiction, the conclusion negates it.

Negation Elimination – When a negative provisional assumption is introduced and leads to a contradiction, the conclusion negates the introduced negation with an affirmative.

Disconfirming a Universal Claim – since a universal claim could always have an exception, it is impossible to confirm that all of something is something by checking cases (unless every member of the category can be enumerated), but a single exception to the rule is enough to disprove the claim.
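The asymmetry can be sketched as a search for a counterexample: one failing case refutes the universal claim, while finding none among the cases examined only shows that those cases conform. The observations below are hypothetical data, and `find_counterexample` is an illustrative helper, not a standard function.

```python
def find_counterexample(items, predicate):
    """Return the first item that violates 'every item satisfies predicate', or None."""
    for item in items:
        if not predicate(item):
            return item
    return None

# Hypothetical observations for the claim "All swans are white."
swans = [("swan1", "white"), ("swan2", "white"), ("swan3", "black")]

def is_white(swan):
    return swan[1] == "white"

print(find_counterexample(swans, is_white))      # ('swan3', 'black'): claim refuted
print(find_counterexample(swans[:2], is_white))  # None: no exception among these two
```

A result of `None` says nothing about swans that were never examined, which is why it does not confirm the universal claim.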

A Priori – Rational inference that does not stem from experience.

A Posteriori – Inferential knowledge that stems from experience.

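Deductive Argument – an inferential argument whose conclusion must be true if its premises are true, because the conclusion follows from the premises by a priori logic alone.

Inductive Argument – an argument whose premises, if true, make its conclusion probable but do not guarantee it.

Abductive Argument – an inference to the best explanation.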

Reference Class – the examples in a set used to support an inductive argument.

Relevant Analogy – legitimate supporting evidence for an inductive argument, which exists when the two things being compared are similar in respects relevant to the characteristic cited in the conclusion.

Relevant Disanalogy – a difference between the two things being compared, in respects relevant to the characteristic cited in the conclusion, which weakens an inductive argument from analogy.

Deductive Nomological Model – a model of explanation in which the explanation takes the form of a deductive argument with a scientific law (itself typically established inductively) as a premise on which the inferences rely.

Nomenclature

The particular way of expressing things in formal logic follows widely shared conventions, much like mathematical symbols or musical notation. The rules need to be learned and the meaning of each symbol must be defined, so here are some examples of their use. First, a summary table you can use as a tool, and then some general ideas…

Table of Arguments

| Symbol | Name | Meaning of Rule | Example of Rule | Notes |
|---|---|---|---|---|
| Reit. | Reiteration | Any earlier line may be repeated | 1. x 2. x (1, Reit.) | Use it to bring an earlier line down next to the rule that will use it. |
| DN | Double Negative | Double negatives make a positive | 1. ~x 2. ~~~x (1, DN) | Any odd number of negatives is negative. Any even number is positive. |
| ^E | Conjunction Elimination | If a conjunction is true, each of its conjuncts is true | 1. P ^ Q 2. Q (1, ^E) 3. P (1, ^E) | Refers to whole statements and doesn't imply sequence. |
| ^I | Conjunction Introduction | Two true statements can be conjoined as conjuncts | 1. P 2. Q 3. P ^ Q (1, 2, ^I) | |
| ∨I | Disjunction Introduction | If a statement is true, anything can be joined to it with "or" and the result will still be true | 1. P 2. P ∨ X (1, ∨I) | ∨I is valid, but the reverse (inferring P from P ∨ X) is not. |
| DEM | DeMorgan's Law | Converts "neither ... nor" and "not both" statements to functional equivalents | Example 1: 1. ~P ^ ~Q 2. ~(P ∨ Q) (1, DEM) 3. ~P ^ ~Q (2, DEM) Example 2: 1. ~P ∨ ~Q 2. ~(P ^ Q) (1, DEM) 3. ~P ∨ ~Q (2, DEM) | Example 1: "neither ... nor" (AKA "both not"). Example 2: "not both". |
| DISTRIB | Distribution | Expresses a functional equivalence between a disjunction of two conjunctions that share a conjunct and a single conjunction, or between a conjunction of two disjunctions that share a disjunct and a single disjunction | 1. (A ^ B) ∨ (A ^ C) 2. A ^ (B ∨ C) (1, DISTRIB) 3. (A ^ B) ∨ (A ^ C) (2, DISTRIB) 1. (A ∨ B) ^ (A ∨ C) 2. A ∨ (B ^ C) (1, DISTRIB) 3. (A ∨ B) ^ (A ∨ C) (2, DISTRIB) | First example is a conjunctive distribution. Second example is a disjunctive distribution. |
| DS | Disjunctive Syllogism | Process of elimination | 1. A ∨ B 2. ~A 3. B (1, 2, DS) | Don't affirm a disjunct! From 1. A ∨ B 2. B you cannot infer ~A, because "or" is inclusive. |
| AUI | Applied Universal Instantiation | If all S are P, then any instance (s) of S is also P | 1. All S are P 2. s is S 3. s is P (1, 2, AUI) | |
| EG | Existential Generalization | An instance shows that some exist | 1. s is S 2. s is P 3. Some S are P (1, 2, EG) | More simply: 1. s is S 2. Something is S (1, EG) |
| EI | Existential Instantiation | We can insert a variable name for a particular, for anonymous reference | 1. Some S are P 2. ‘b’ is S & ‘b’ is P (1, EI) | Make sure the meaning of the variable doesn't change during the argument! |
| =I | Law of Identity | Everything is identical to itself | 1. a = a (=I) | Useful for emphasis. |
| =E | Identity Elimination | Whatever applies to a thing under one name applies to it under any other. Indiscernibility of identicals. | 1. a is P 2. b = a 3. b is P (1, 2, =E) | Not to be confused with Reit. or =I. |
| CONV | Conversion | Reversing the order of subject and predicate is valid only for E and I | Valid Conversions: 1. No S are P 2. No P are S (1, CONV) 1. Some S are P 2. Some P are S (1, CONV) | INVALID Conversions: 1. All S are P 2. All P are S (1, CONV) 1. Some S are not P 2. Some P are not S (1, CONV) |
| OBV | Obversion | Changing the quality (affirmative to negative or vice versa) while replacing the predicate with its complement is valid | 1. All S are P 2. No S are non-P (1, OBV) 1. No S are P 2. All S are non-P (1, OBV) 1. Some S are P 2. Some S are not non-P (1, OBV) 1. Some S are not P 2. Some S are non-P (1, OBV) | Obversion is valid for all four forms (A, E, I, O). |
| CTRA-CG | Contraposition of a Categorical | Reversing the order while replacing both subject and predicate with their complements is valid only for A and O | Valid Contrapositions: 1. All S are P 2. All non-P are non-S (1, CTRA-CG) 1. Some S are not P 2. Some non-P are not non-S (1, CTRA-CG) | INVALID Contrapositions: 1. No S are P 2. No non-P are non-S (1, CTRA-CG) 1. Some S are P 2. Some non-P are non-S (1, CTRA-CG) |
| CTRA-MA | Contraposition of a Material Conditional | Reverses the order while negating both the antecedent and the consequent | 1. P → Q 2. ~Q → ~P (1, CTRA-MA) 1. ~Q → ~P 2. P → Q (1, CTRA-MA) | Useful for proofs. Can remove negations as well as add them in the swap. |
| QN | Quantifier Negation Law | No S are P ≡ All S are non-P; Not all S are P ≡ Some S are not P | 1. No S are P 2. All S are non-P (1, QN) 3. No S are P (2, QN) 1. Not all S are P 2. Some S are not P (1, QN) 3. Not all S are P (2, QN) | All S are P ← CONTRADICTS → Some S are not P; No S are P ← CONTRADICTS → Some S are P |
| CS | Categorical Syllogism | Two premises and a conclusion that include a minor, a major, and a middle term; the minor and major terms appear in the conclusion | 1. All S are M 2. All M are P C. All S are P 1. No S are M 2. All P are M C. No S are P 1. No S are not M 2. All M are not P C. No S are P | Has six rules and corresponding fallacies: 1. there can't be four terms 2. the middle term must be distributed in at least one premise 3. any term distributed in the conclusion must be distributed in a premise 4. at least one premise must be affirmative 5. no negative premises for affirmative conclusions 6. two universal premises cannot yield a particular conclusion |
| CS AAA-1 | Categorical Syllogism Barbara | A valid categorical syllogism in the AAA mood and first figure | 1. All M are P 2. All S are M C. All S are P | Look for premise swaps: 1. All S are M 2. All M are P |
| CS EAE-1 | Categorical Syllogism Celarent | A valid categorical syllogism in the EAE mood and first figure | 1. No M are P 2. All S are M C. No S are P | Look for premise swaps: 1. All S are M 2. No M are P |
| CS AII-1 | Categorical Syllogism Darii | A valid categorical syllogism in the AII mood and first figure | 1. All M are P 2. Some S are M C. Some S are P | Look for premise swaps: 1. Some S are M 2. All M are P |
| CS EIO-1 | Categorical Syllogism Ferio | A valid categorical syllogism in the EIO mood and first figure | 1. No M are P 2. Some S are M C. Some S are not P | Look for premise swaps: 1. Some S are M 2. No M are P |
| CS EAE-2 | Categorical Syllogism Cesare | A valid categorical syllogism in the EAE mood and second figure | 1. No P are M 2. All S are M C. No S are P | Look for premise swaps: 1. All S are M 2. No P are M |
| CS EIO-2 | Categorical Syllogism Festino | A valid categorical syllogism in the EIO mood and second figure | 1. No P are M 2. Some S are M C. Some S are not P | Look for premise swaps: 1. Some S are M 2. No P are M |
| CS AEE-2 | Categorical Syllogism Camestres | A valid categorical syllogism in the AEE mood and second figure | 1. All P are M 2. No S are M C. No S are P | Look for premise swaps: 1. No S are M 2. All P are M |
| CS AOO-2 | Categorical Syllogism Baroco | A valid categorical syllogism in the AOO mood and second figure | 1. All P are M 2. Some S are not M C. Some S are not P | Look for premise swaps: 1. Some S are not M 2. All P are M |
| CS AII-3 | Categorical Syllogism Datisi | A valid categorical syllogism in the AII mood and third figure | 1. All M are P 2. Some M are S C. Some S are P | Look for premise swaps: 1. Some M are S 2. All M are P |
| CS EIO-3 | Categorical Syllogism Ferison | A valid categorical syllogism in the EIO mood and third figure | 1. No M are P 2. Some M are S C. Some S are not P | Look for premise swaps: 1. Some M are S 2. No M are P |
| CS OAO-3 | Categorical Syllogism Bocardo | A valid categorical syllogism in the OAO mood and third figure | 1. Some M are not P 2. All M are S C. Some S are not P | Look for premise swaps: 1. All M are S 2. Some M are not P |
| CS IAI-3 | Categorical Syllogism Disamis | A valid categorical syllogism in the IAI mood and third figure | 1. Some M are P 2. All M are S C. Some S are P | Look for premise swaps: 1. All M are S 2. Some M are P |
| CS EIO-4 | Categorical Syllogism Fresison | A valid categorical syllogism in the EIO mood and fourth figure | 1. No P are M 2. Some M are S C. Some S are not P | Look for premise swaps: 1. Some M are S 2. No P are M |
| CS AEE-4 | Categorical Syllogism Calemes | A valid categorical syllogism in the AEE mood and fourth figure | 1. All P are M 2. No M are S C. No S are P | Look for premise swaps: 1. No M are S 2. All P are M |
| CS IAI-4 | Categorical Syllogism Dimaris | A valid categorical syllogism in the IAI mood and fourth figure | 1. Some P are M 2. All M are S C. Some S are P | Look for premise swaps: 1. All M are S 2. Some P are M |
| MP | Modus Ponens | If the conditional and its antecedent are true, the consequent is true | 1. P → Q 2. P C. Q (1, 2, MP) | The order of premises doesn't matter. |
| MT | Modus Tollens | If the consequent is false, the antecedent is false | 1. P → Q 2. ~Q C. ~P (1, 2, MT) | The order of premises doesn't matter. |
| IMP | Implication | "If this, then that" is logically equivalent to "not this, or that," and vice versa | 1. ~P ∨ Q 2. P → Q (1, IMP) 1. P → Q 2. ~P ∨ Q (1, IMP) | Converts conditionals to disjunctions and vice versa. |
| NEGCON | Negated Conditional | A negated "if this then that" is logically equivalent to "this and not that" | 1. ~(P → Q) 2. P & ~Q (1, NEGCON) 1. P & ~Q 2. ~(P → Q) (1, NEGCON) | Not too useful, but works in both directions. |
| CP | Conditional Proof | A proof derived from a hypothetical provisional assumption, AKA "conditional introduction" | 1. T → Y 2. Y → Z 3. \| Ṯ (ASSUME) 4. \| Y (1, 3, MP) 5. \| Z (2, 4, MP) 6. T → Z (3-5, CP) | Mark the "scope" of the hypothetical statements with Fitch bars (\|). |
| ASSUME | Assumption | The hypothetical line (the first line) in a conditional proof | 1. T → Y 2. Y → Z 3. \| Ṯ (ASSUME) 4. \| Y (1, 3, MP) 5. \| Z (2, 4, MP) 6. T → Z (3-5, CP) | Mark the assumption with an underline: Ṯ |
| →I | Conditional Introduction | Conditional proof from a hypothetical or provisional assumption; common nomenclature instead of CP | 1. T → Y 2. Y → Z 3. \| Ṯ (ASSUME) 4. \| Y (1, 3, MP) 5. \| Z (2, 4, MP) 6. T → Z (3-5, →I) | →I has the same meaning as CP. "Introduction" means the rule introduces a conditional (→) as its conclusion. |
| \| | Scope Indicator | "Fitch bars," "scope lines," or "assumption lines" mark an assumption and the lines derived from it | 1. \| P … n. \| Q n+1. P → Q (1-n, CP) | n is the last line inside the scope. The line following the scope, n+1. P → Q (1-n, CP), is the "discharge" line. |
| ∨E | Disjunction Elimination | Allows a conclusion to be derived from a disjunction by cases | 1. P ∨ Q 2. \| P (ASSUME) 3. \| R 4. \| Q (ASSUME) 5. \| R 6. R (1, 2-3, 4-5, ∨E) | Must underline each assumption and delineate it with scope bars. |
| HS | Hypothetical Syllogism | A series of interdependent conditionals in which the consequent of each conditional serves as the antecedent of the next | 1. A → B 2. B → C 3. C → D 4. A → D (1-3, HS) | Only requires scope bars if an assumption is made. Otherwise, citing HS will suffice. |
| EXPO | Exportation | A conditional with a conjunction as its antecedent equals a conditional with one conjunct as its antecedent and, as its consequent, a conditional whose antecedent is the other conjunct | ((P & Q) → R) ≡ (P → (Q → R)) | Double and triple Fitch bars may be required for nested provisional conditionals. |
| ~I | Negation Introduction | Negates a previously introduced provisional assumption because it leads to a contradiction | 1. \| P (ASSUME) \| … n. \| Q & ~Q n+1. ~P (1-n, ~I) | Q and ~Q can be on separate lines: 1. \| P (ASSUME) 2. \| Q 3. \| ~Q 4. ~P (1-3, ~I) |
| ~E | Negation Elimination | When an assumption that something is false leads to a contradiction, the negation is eliminated | 1. \| ~P (ASSUME) \| … n. \| Q & ~Q n+1. P (1-n, ~E) | The difference between ~I and ~E: ~I negates an affirmative assumption, while ~E negates a negative one, proving it positive. |

Be Methodical

Let’s start with a word of advice. If you’ve ever worked out a math problem, you know some concentration is required. To think through each step, you may have to take it slow. It isn’t like everyday conversation that you can easily whiz through. Being smarter isn’t just a matter of fast talk and thinking on your feet. That may come in handy in other contexts, but in logical thought, the analytical side of your brain is what is needed. Careful, systematic, methodical deliberation is the rule. Being methodical, we will derive sound and complete arguments using the rules of inference step-by-step, proving their validity as we go, using tools like Truth Tables and Venn Diagrams, as described below. By complete we mean that for every conclusion proven valid using a Truth Table or a Venn Diagram, there will be some combination of rules we will use, going step-by-step and inference-by-inference, to derive that conclusion.

Sufficient Definitions

Start with a special dictionary for your argument. Begin with a “let” statement. Eliminate unnecessary definitions. Definitions should be as concise as possible without excluding any essential parts. Be as explicit as the argument requires, but no more. For example:

Let S be “spaghetti is made of spinach.”

This statement is concise, but is it explicit enough? Maybe we need to define spinach or spaghetti. We also didn’t say that all spaghetti is made of spinach; the weaker claim that some spaghetti is made of spinach can be assumed from this statement. But why would we need to define spaghetti or spinach? We probably don’t, unless in context we have been having some debate about what constitutes spaghetti or spinach. Context determines the extent to which more explicit definitions are needed. Ambiguity also arises with relative words like “tall” or “close” and “left” or “right.”

In Pamalogy, we teach that all good things take place in one Universe, or possibly another. In classical logic, one Universe is assumed to be real. We won’t make that assumption. This will affect the extent to which we make certain definitions.

Classical logic also avoids implicatures. When we imply something in everyday conversation just to be polite or witty, we may not say what we mean. Dr. Watson puts it this way: “indirect speech, or implicature, involves ‘flouting’ the rules of quantity, quality, manner, and relevance” (Watson 2022). We’re not going to do that here.

Precision with respect to logical operators is particularly important. ~P (“not P”) means only that P is false; it doesn’t mean the opposite of P, even if the opposite of P happens to be true. “Some As are Bs” means that at least one A is a B. Even though it is expressed as a plural, “some” could mean one. “Some As are Bs” might also suggest that “not all” As are Bs, but even if all As are Bs, it is still true (provided there is at least one A) that “some” As are Bs. “Every A is B” may also refer to just one A; “every” tends to suggest more than one in everyday contexts, but not so in standard classical logic. In fact, even if there are no As at all, zero, it is still true that every A is B; such a claim is said to be vacuously true.
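Python’s built-in `all` and `any` happen to follow the same conventions over a finite collection: `all` of an empty collection is `True` and `any` of an empty collection is `False`. The sketch below uses them to model “every” and “some” over a small hypothetical domain of numbers; `every` and `some` are illustrative helper names.

```python
def every(domain, a, b):
    """'Every A is B': true unless some member is an A but not a B."""
    return all(b(x) for x in domain if a(x))

def some(domain, a, b):
    """'Some A is B': true if at least one member is both an A and a B."""
    return any(b(x) for x in domain if a(x))

def is_even(n):
    return n % 2 == 0

def is_positive(n):
    return n > 0

def is_over_100(n):
    return n > 100

domain = [2, 4, 6]
print(every(domain, is_even, is_positive), some(domain, is_even, is_positive))  # True True
print(some([2], is_even, is_positive))          # True: "some" can mean just one
print(every(domain, is_over_100, is_positive))  # True: vacuously, nothing is over 100
print(some(domain, is_over_100, is_positive))   # False
```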

There’s more. “A and B” does not mean “A and then B.” Sequence is not implied by the order presented: “B and A” is equivalent to “A and B.” This is a “conjunction.” Then there is the “disjunction.” By default, “A or B” is true when A is true, when B is true, or when both are true. This is called the “inclusive or.” If the meaning is one or the other but not both, then the fact that only one choice is available will be specified. This would be called the “exclusive or.” Please note that the word “unless” also expresses the inclusive or, not the exclusive or. Adding the words “either” or “else” won’t change the truth value of a disjunction, which is always an inclusive or by default.
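The inclusive and exclusive readings of “or” differ on exactly one row of the truth table: the row where both disjuncts are true. This minimal Python sketch prints both readings side by side; the helper names are hypothetical.

```python
from itertools import product

def inclusive_or(a, b):
    """The default 'or': true when at least one disjunct is true."""
    return a or b

def exclusive_or(a, b):
    """'One or the other, but not both.'"""
    return a != b

def tf(value):
    return "T" if value else "F"

rows = [(a, b, inclusive_or(a, b), exclusive_or(a, b))
        for a, b in product([True, False], repeat=2)]

print("A B | A or B (inclusive) | A or B (exclusive)")
for a, b, inc, exc in rows:
    print(tf(a), tf(b), "|", tf(inc), "|", tf(exc))
```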

Now, for your reference, A and B, as given in the examples just mentioned, are called “disjuncts” when in a disjunction and “conjuncts” when in a conjunction, and these terms will come up again. Also keep in mind that, with respect to any of this, impossibility makes no difference: “A or B” does not require that either A or B be possible.

Another peculiar subject is the conditional. Be warned that “if A then B” does not require causality. “If A then B” is true whenever A is false (whether B is true or false) or B is true; it is false only when A is true and B is false. But A and B are just one example; we could have said “if X then Y” or used any other letters. For a more consistent nomenclature for conditionals, given that any variables could be used, we’ll call the “if” side the “antecedent” and the “then” side the “consequent.”
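The truth conditions of the conditional can be tabulated directly, and the same loop confirms that “if A then B” always agrees with “not A, or B” (the Implication rule). This is a minimal Python sketch; `implies` and `tf` are hypothetical helper names.

```python
from itertools import product

def implies(a, b):
    """Material conditional 'if a then b': false only when a is true and b is false."""
    return not (a and not b)

def tf(value):
    return "T" if value else "F"

print("A B | A -> B | ~A v B")
for a, b in product([True, False], repeat=2):
    print(tf(a), tf(b), "|", tf(implies(a, b)), "     |", tf((not a) or b))
```

Only the second row (A true, B false) comes out false, and the two right-hand columns are identical.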

Bivalence – you should also be aware that in classical logic there are two truth values. Either something is true or it is false. There is no in between. This is what we call “the law of the excluded middle.” If it seems like there is something in between (in other words, neither true nor false), the problem is that you didn’t create a precise enough definition. You need to disambiguate so you don’t equivocate. Observe the virtue of consistency in meaning what you say. We’ll use the classical system of logic here, as it is a recognized standard, and the classical system assumes bivalence. In addition to the “law of the excluded middle,” bivalence also entails the “law of non-contradiction”: a statement can’t be both true and false. A statement is neither in between true and false nor both true and false. Be consistent, define your terms precisely, and you’ll be playing by the fair rules of classical logic.

Hypotheticals

For the sake of analysis, if a statement is made here, it is assumed to be true, even if it sounds impossible, unless it is self-contradictory. We will assume for the sake of argument that, at least in terms of form and analysis using the classical system, whether a statement is true or false matters less than what its truth or falsity would mean for the logical inferences that accompany it. In fact, it doesn’t matter at all. Think of math. If we say 2 + 3 = 5, then any 2 plus any 3 will equal 5; this is a valid argument regardless of whether we miscounted or lied when we came up with the 2 and the 3. The truth value of 2 or 3 is not what we are discussing. 5, in its relation to any real 2 and 3, is what we are discussing.

The nomenclature of formal logic will utilize symbols given in definitions to shorten propositional statements into something looking like mathematical formulas, which for the sake of analysis, we will presume are true, whether they are or not. Introducing one concept at a time below, we’ll start with the most obvious ideas and increase complexity and difficulty as we go.

Rule of Reiteration (Reit.) – a statement is the same as itself.

1. S

2. S (1, Reit.)

Truth table for Reiteration – This is the truth table for a reiteration. Let S be a Statement on line 1. Let S be reiterated in line 2. If S is true in line 1, then S is true in line 2 and if S is false in line 1, then S is false in line 2.

| 1. S | 2. S |
|---|---|
| T | T |
| F | F |

In the Truth Table above, S could simply mean “statement,” or, by prefacing a definition with “Let,” it could stand for a particular statement such as “spaghetti is made of spinach.” Notice that form is what matters for formal arguments. Whether S means “statement” or S means “spaghetti is made of spinach,” the Truth Table yields the same results.

The point is to make S, or any symbol used, easy to remember. Some propositions are more complex. Some have multiple statements. To avoid confusion, a good guideline, or convention, is to start with the first letter of the first noun in the first premise. Then, for a second premise, if its first noun begins with the same letter as the first premise’s symbol, use its first adjective, adverb or verb instead. For any numbered premise, if the best choice is already taken by a previous statement, use the next available adjective, adverb or verb (NAAV) so that each statement has a unique identifying symbol. Avoid symbolizing prepositions, and don’t use linking verbs, such as “I” for “is” or “A” for “are.” Finally, you may run out of letters in the alphabet. If that happens, your proposition is probably too long. Assuming the length and complexity of your argument are necessary, you can always add numbers, as in N1, N2 and so on, and you’ll never run out of symbols for your statements.

Negation

Negation is symbolized with the “~” sign and sometimes the “-” sign. Sometimes it is printed “Not ~,” but that should be avoided, as it can be misconstrued as a double negative when that is not intended. The negation sign is followed by the letter for the statement that was defined in the “Let” section of your formal argument. Be aware that the negation sign does not mean opposite. The opposite of “John is smart” might be “John is stupid.” But not all people who are not smart are stupid. A person of average intelligence might not be categorized as smart, but neither would they be categorized as stupid. Our goal is to determine whether a statement is true or false. If something is not true, then it is false. If it is false, then it is not true. As for double negatives, if it is not not true, then it is true, and if it is not not false, then it is false. Any even number of negations cancels out, leaving the truth value of the atomic statement unchanged. Any odd number of negations serves as a single negation of the atomic statement. While this may sound unnecessarily confusing at first, it actually helps simplify complex statements and determine their truth or falsity.

Truth Table for Simple Negation – For any statement S: If S is true, ~S is false. If S is false, ~S is true.

| S | ~S |
|---|---|
| T | F |
| F | T |

Truth Table for Multiple Negation – For any proposition P: if P is true, an odd number of negations of P is false and an even number of negations of P is true.

| P | ~P | ~~P | ~~~P | ~~~~P | ~~~~~P |
|---|---|---|---|---|---|
| T | F | T | F | T | F |
| F | T | F | T | F | T |
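The even/odd pattern in the table above can be reproduced with a few lines of Python. This is a sketch of our own (the helper `negate_n_times` is hypothetical): it applies ~ one step at a time, rebuilds the six columns, and then checks the shortcut that an even count leaves P unchanged while an odd count flips it.

```python
def negate_n_times(value: bool, n: int) -> bool:
    """Apply the ~ operator to a truth value n times, one negation at a time."""
    for _ in range(n):
        value = not value
    return value

def tv(value: bool) -> str:
    return "T" if value else "F"

# Rebuild the table: columns P, ~P, ~~P, ~~~P, ~~~~P, ~~~~~P.
headers = ["~" * n + "P" for n in range(6)]
print(" | ".join(headers))
for p in (True, False):
    print(" | ".join(tv(negate_n_times(p, n)) for n in range(6)))

# Shortcut: an even number of negations leaves P unchanged; an odd number flips it.
for p in (True, False):
    for n in range(10):
        assert negate_n_times(p, n) == (p if n % 2 == 0 else not p)
```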

Rule of Double Negation (DN) – a double negative for any proposition P is equivalent to proposition P.

1. P

2. ~~P (1, DN)

and

1. ~~P

2. P (1, DN)

Warning: the rule of double negation applies to whole statements, not to individual words within statements. For example, let C = “Children are unhappy when they eat vegetables.” ~C does not mean “Children are happy when they don’t eat vegetables.” ~C means “it is untrue that children are unhappy when they do eat vegetables.” The double negation then means “it is untrue that it is untrue that children are unhappy when they eat vegetables.”

Second Warning: negations are not opposites. In the statement above, neither does the negation ~C mean “children are happy when they eat vegetables” nor does it mean “children are happy when they don’t eat vegetables.” Leave the word unhappy alone and let the negation apply to the whole statement. The word “unhappy” is part of the statement that is either true or false. Negation means the proposition is untrue. In the bivalent mindset of classical logic, we are looking for truth or falsity of whole statements, not opposites.

Proving the Validity of Double Negation

A conclusion is usually labeled as C, while the premises are numbered as in these two DN propositions …

1. P

C. ~~P (1, DN)

1. ~~P

C. P (1, DN)

To use a Truth Table for proof of validity, follow these steps …

Step One: begin with the header. Atomic P is the first column header. The premise, 1. P or 1. ~~P, is inserted into the header row where appropriate, as follows …

| P | 1. P | ~P | C. ~~P |

| P | ~P | 1. ~~P | C. P |

Step Two: fill in the truth values. The case where P is true goes in the first row, so enter T. If P is not true, then it is false, so enter F in the second row. Then go to the next column. If that says P, then it is a reiteration and the truth value is the same as for P. If it says ~P, then the value is the reverse of P’s: F in the first row and T in the second.

| P | 1. P | ~P | C. ~~P |
|---|---|---|---|
| T | T | F | T |
| F | F | T | F |

| P | ~P | 1. ~~P | C. P |
|---|---|---|---|
| T | F | T | T |
| F | T | F | F |

The negation of the negation will produce a reversal of the T row and F row of ~P for ~~P. Complete the table.

Step Three: test the conclusion column. Find every row in which all of the premises are T, then look at the conclusion column in those rows. In this case there is only one premise, and it is T in only one row. If the conclusion is also T in every row where the premises are all T, the argument is valid.

Truth Functional Equivalence – Now notice that C. and 1. match not only in row one, where both the premise and the conclusion are true, but also in row two, where both are false. This happens in both tables. In a more complex table, with more rows covering every possible combination of truth values, every row would have to match, not just one. Two sentences A and B are truth functionally equivalent if and only if their corresponding columns have the same truth value assignment (T or F) on every row of the truth table.
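The definition of truth functional equivalence translates directly into a check over every row. In this hypothetical Python helper, each sentence is written as a function from truth values to a truth value, and `equivalent` simply compares the two columns row by row.

```python
from itertools import product

def equivalent(f, g, num_vars: int) -> bool:
    """True when f and g give the same truth value on every row of the truth table."""
    rows = product([True, False], repeat=num_vars)
    return all(f(*row) == g(*row) for row in rows)

# P and ~~P match on both rows, so they are truth functionally equivalent.
print(equivalent(lambda p: p, lambda p: not (not p), 1))  # True
# P and ~P differ on both rows.
print(equivalent(lambda p: p, lambda p: not p, 1))        # False
```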

Proving an argument invalid – An argument is valid if its conclusion cannot be false so long as its premises are all true. That means that if we wish to test for invalidity, we just find every row where all of the premises are true. In each such row, the conclusion must also be true. If there is even one row where the premises are all true but the conclusion is false, then the argument is invalid.
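The invalidity test just described can be sketched in Python as a search for counterexample rows, that is, rows where every premise is true and the conclusion is false. The helper names `counterexamples` and `is_valid` are our own, and premises and conclusions are assumed to be written as Python functions of the atomic statements.

```python
from itertools import product

def counterexamples(premises, conclusion, num_vars: int):
    """Return every row where all premises are true but the conclusion is false."""
    found = []
    for row in product([True, False], repeat=num_vars):
        if all(premise(*row) for premise in premises) and not conclusion(*row):
            found.append(row)
    return found

def is_valid(premises, conclusion, num_vars: int) -> bool:
    """An argument is valid when it has no counterexample rows."""
    return not counterexamples(premises, conclusion, num_vars)

# DN: from P infer ~~P (valid).
print(is_valid([lambda p: p], lambda p: not (not p), 1))  # True
# From P infer ~P (invalid): the row where P is true is a counterexample.
print(counterexamples([lambda p: p], lambda p: not p, 1))  # [(True,)]
```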

Disjunction

The inclusive “or” operator is indicated with a symbol that looks like the letter “v,” but it is really a wedge symbol (∨). Imagine a wedge between two possibilities. If it is an exclusive “or,” the wedge will have a line underneath it ( ⩡ ). If you are trying to type this on a Mac, Ctrl-tap or Ctrl-click will bring up a menu that includes an “emojis and symbols” table. Find all your logic symbols there and insert them into your text. Otherwise, you may wind up with serifs in your “v” or an underlined “v.” There will be other symbols discussed below that won’t easily be found on a common keyboard, so this is advised from the start. If you use a PC, you may need to enter Unicode character codes if you don’t have an application or plugin to search through.

Multiple Disjunctions

Things start getting confusing once we create multiple disjunctions in our statements, such as A ∨ B ∨ C. Just follow these rules and everything will make sense. We’ll break each part down into simple sentences and then look at it in a Venn Diagram or Truth Table. To do this, we need to simplify the multiple disjunction into a single disjunction by placing parentheses around two of the disjuncts. So, A ∨ B ∨ C is equivalent to A ∨ (B ∨ C), which is also equivalent to (A ∨ B) ∨ C. Counting these as the first three formulations, we could also change the order of the disjuncts, so that all of the following are logically equivalent as well:

4. A ∨ C ∨ B

5. B ∨ A ∨ C

6. B ∨ C ∨ A

7. C ∨ A ∨ B

8. C ∨ B ∨ A (and then also with parentheses …)

9. (A ∨ C) ∨ B

10. (B ∨ A) ∨ C

11. (B ∨ C) ∨ A

12. (C ∨ A) ∨ B

13. (C ∨ B) ∨ A

14. A ∨ (C ∨ B)

15. B ∨ (A ∨ C)

16. B ∨ (C ∨ A)

17. C ∨ (A ∨ B)

18. C ∨ (B ∨ A)

Let’s say P = A ∨ B ∨ C. P can be written in eighteen logically equivalent formulations. Now imagine how many possible combinations there would be for a statement with more than three disjuncts. Fortunately, since any one will work the same way, given that they are all logically equivalent, we can focus on reducing statements to single disjunctions, using brackets to group expressions into single symbols of ideas, without worrying about the order. Thus all we have to do to make A ∨ B ∨ C ∨ D manageable is add two pairs of brackets: (A ∨ B) ∨ (C ∨ D). We can also place brackets within brackets without changing the truth value, if we choose. Thus (A ∨ B) ∨ (C ∨ D) is logically equivalent to A ∨ (B ∨ (C ∨ D)), which is logically equivalent to ((A ∨ B) ∨ C) ∨ D.
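To confirm the count of eighteen and the claim that all of them agree, the Python sketch below (our own illustration) generates every ordering of A, B and C in each of the three bracketings and compares each one with A ∨ B ∨ C on all eight rows of the truth table.

```python
from itertools import permutations, product

def formulations():
    """Yield (text, function) for each ordering of A, B, C in each of three bracketings."""
    for x, y, z in permutations("ABC"):
        # Default arguments freeze the current letters inside each lambda.
        yield f"{x} ∨ {y} ∨ {z}", lambda v, x=x, y=y, z=z: v[x] or v[y] or v[z]
        yield f"({x} ∨ {y}) ∨ {z}", lambda v, x=x, y=y, z=z: (v[x] or v[y]) or v[z]
        yield f"{x} ∨ ({y} ∨ {z})", lambda v, x=x, y=y, z=z: v[x] or (v[y] or v[z])

forms = list(formulations())
print(len(forms))  # 18

# Compare every formulation with A ∨ B ∨ C on all eight rows.
all_equivalent = all(
    f({"A": a, "B": b, "C": c}) == (a or b or c)
    for a, b, c in product([True, False], repeat=3)
    for _, f in forms
)
print(all_equivalent)  # True
```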

Logical Equivalence – you may have noticed frequent use of the expression “logical equivalence” here. The paragraphs above could have been shortened with the symbol for logical equivalence. This can be expressed in a number of ways. Some use double-pointing arrows ( ↔ ) or ( ⟺ ), others use four dots in a square formation ( ∷ ), while still others use an “E” symbol. This last convention places the variables together on the right side of the “ ⋿ ,” so it might read “⋿AB.” We won’t use any of those conventions here. Instead, we prefer the triple bar ( ≡ ). So, for the remainder of this guide page, we’ll use “ ≡ ” to express logical equivalence. By this expression we mean that the truth value of what is on the right side of the symbol ( ≡ ) is the same in every case as the truth value of what is on the left side of it. Conversely, the value on the left is the same in every case as the value on the right. So P ≡ Q means the same thing as Q ≡ P. In fact, the same bracketing method we used to simplify disjunctions could also be used to simplify logical equivalences. So we could say that if what we just said is true, then (P ≡ Q) ≡ (Q ≡ P). And it is true, which means this is a sound and valid argument.

Logical equivalence always relates more than one expression. The eighteen versions of the expression A ∨ B ∨ C above could have been joined as eighteen separate disjuncts, each representing an equivalent statement, so that if each item on the list was a premise P1 … P18, starting with P1 ≡ A ∨ B ∨ C, then P1 would be ≡

P1 ∨ P2 ∨ P3 ∨ P4 ∨ P5 ∨ P6 ∨ P7 ∨ P8 ∨ P9 ∨ P10 ∨ P11 ∨ P12 ∨ P13 ∨ P14 ∨ P15 ∨ P16 ∨ P17 ∨ P18

The problem with very long expressions like this is that, to test their truth value, we need to work with one connective at a time. There are many bracket combinations we could use to express the same thing. Fortunately, it doesn’t matter where we place our brackets, so long as each pair of brackets contains a single disjunction. As an example, the long multiple disjunction above would be ≡

P1 ∨ (P2 ∨ (P3 ∨ (P4 ∨ (P5 ∨ (P6 ∨ (P7 ∨ (P8 ∨ (P9 ∨ (P10 ∨ (P11 ∨ (P12 ∨ (P13 ∨ (P14 ∨ (P15 ∨ (P16 ∨ (P17 ∨ P18))))))))))))))))

You probably noticed it’s neatly ordered from left to right. This makes it clear how many levels of brackets are nested inside each other. It also makes it possible to work in an orderly fashion to complete Truth Tables. Sometimes the problems given to us are complex by nature and seem to have little order, but we might be able to sort through them and rearrange them so that they are easier to work with. All of this logical equivalence is very helpful in that regard.

Rephrasing – another trick for making expressions easier to work with is to break complex statements apart into separate statements. So, let’s say three people are in a room – Ellen, Fanny and Gertrude – and one of them passes gas. Is it true that whoever smelt it dealt it? One thing we do know. Either Ellen, Fanny or Gertrude passed gas. Let E mean “Ellen farted”, F mean “Fanny farted” and G mean “Gertrude farted”. Now all you have to say is E ∨ F ∨ G.

Now add brackets to the multiple disjunction above: E ∨ (F ∨ G). Working with this simplified statement, we’re ready to roll, because (F ∨ G) could itself be expressed as a single symbol, defined with any letter. You might, for instance, define Z as (F ∨ G) and say, “Z ≡ (F ∨ G).”

Conjunction

The logical operator “and” is often expressed as an ampersand (&), because it is readily available on keyboards. The upside-down wedge ( ⋀ ) looks like the Greek lambda in small capital form ( ᴧ ), but that’s not what it is. Notice there are no serifs in the wedge, but there might be if you use a serif font for the small capital lambda. The upside-down wedge is supposed to indicate two sides connecting together. It is not on most keyboards, but the caret (^) above the “6” key is a common stand-in for it. You might think all these choices could be avoided. Why not use a plus sign (+)? Shouldn’t that be the operator of choice for conjunctions? But no, because very often “and” combines two different kinds of truth and doesn’t amount to apples-to-apples addition. Using the “+” sign would probably be confusing. We’ll reserve the plus sign for mathematical equations and refrain from using it as a logical operator. You might also find some logicians use the dot ( • ). This can easily be confused with a multiplication symbol, so we’ll avoid that too. Here we’ll use the conventional upside-down wedge ( ⋀ ) to express “and” and its equivalent conjunctions, “also”, “in addition to”, “additionally”, “simultaneously”, “plus”, “moreover”, “together with” etc. Some words form conjunctions that might surprise you, like “but,” and you should also be aware that repeated “and” equivalents, like “and also,” don’t make a double conjunction unless they conjoin two different atomic statements.

Multiple Conjunctions

“And also” may be just one conjunction indicator when interpreting prose sentences, but there are still sentences that result in multiple conjunctions, and, like disjunctions and other multiple and complex statements, they can be simplified by using parentheses.

Let A mean “‘And also’ may be just one conjunction indicator when interpreting prose sentences”

Let S mean “there are still sentences that result in multiple conjunctions”

Let L mean “like disjunctions and other multiple and complex statements, they can be simplified by using parentheses”

A ⋀ S ⋀ L ≡ (A ⋀ S) ⋀ L ≡ A ⋀ (S ⋀ L)

Now, as with disjunctions, we can change the order of the variables in a conjunction without changing its truth value. However, it is always good practice to keep the variables in the order in which they were defined, and to define them in the order of the prose sentences they come from, as it is easier to follow that way.

Eliminating Conjunctions

Since a true conjunction means both conjuncts are true, letting ^E mean that a conjunction was eliminated, the following argument is valid:

1. P ^ Q

2. P (1, ^E)

3. Q (1, ^E)

This also works in reverse. When it does, it is called “conjunction introduction” (&I). If each conjunct is true on its own line, then the conjunction of the two must also be true. That is:

1. P

2. Q

3. P ^ Q (1, 2, &I)

Conjunction elimination only works when the conjunction is the main connective of the line. For instance, (P & Q) ∨ R contains a conjunction, but inferring P from it is not valid, because the conjunction is not the main connective. The main connective is the disjunction (∨). Similarly, the conjunction ( ^ ) is not the main connective in ~(P ^ Q). The negation ( ~ ) is.
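Reusing the row-by-row validity idea, the following Python sketch (with a hypothetical `is_valid` helper) checks ^E and &I and also shows why conjunction elimination fails when the conjunction is not the main connective, as in (P & Q) ∨ R.

```python
from itertools import product

def is_valid(premises, conclusion, num_vars: int) -> bool:
    """Valid when every row that makes all premises true also makes the conclusion true."""
    return all(
        conclusion(*row)
        for row in product([True, False], repeat=num_vars)
        if all(premise(*row) for premise in premises)
    )

# ^E: from P ^ Q infer P, or infer Q.
print(is_valid([lambda p, q: p and q], lambda p, q: p, 2))                  # True
print(is_valid([lambda p, q: p and q], lambda p, q: q, 2))                  # True
# &I: from P and Q on separate lines infer P ^ Q.
print(is_valid([lambda p, q: p, lambda p, q: q], lambda p, q: p and q, 2))  # True
# Conjunction is not the main connective: from (P & Q) ∨ R infer P.
print(is_valid([lambda p, q, r: (p and q) or r], lambda p, q, r: p, 3))     # False
```

The last check fails on rows where R is true and P is false: the premise is true there, but P is not.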

Fallacy of Predicate Conjunction – One thing to watch out for is what is actually meant by P ^ Q. If Paul marries Quinn, P ^ Q would be true for “Paul got married” and “Quinn got married,” but the conjunction does not mean “Paul and Quinn got married” in the sense of marrying each other. It only says they both got married, not necessarily to each other. Also, in most contexts, to say “Paul married Quinn” would mean “Quinn married Paul.” But suppose there were a culture with polygamy, or some legal or sacramental process that involved complicated stages in a marital union, divorce or annulment. Then it would not necessarily follow that if Paul married Quinn, Quinn married Paul. In those contexts, P ^ Q might or might not be interpreted that way, but either way, what the rules of logic are saying is that if P is true and Q is true (for that context), then P ^ Q is true. The predicate conjunction needs to be specified, and perhaps also the atomic statements, so that it isn’t ambiguous. Otherwise, the meaning leads to the fallacy of predicate conjunction.

Eliminating & Introducing Disjunctions

The rules work differently for eliminating or introducing disjunctions than for conjunctions. With disjunctions, if P is true, meaning Paul is married, then any statement, whether true or false, could be added as a disjunct and the result will still be true. We’ll mark such inferences with the ∨I or ∨E labels, the way we did with conjunctions: first the line where the supposedly true statement was made, then a comma, and then the rule being applied. So …

1. P

2. P ∨ Q (1, ∨I)

This is a valid inference. However, “eliminating” a disjunction by simply dropping one of its disjuncts, which is what we mean by ∨E here, is always an invalid inference, so we will never use it. If Paul is married, P ∨ Q is true whether or not Quinn is a baked potato. Now suppose it had not been established that Paul was married (with P meaning “Paul is married”), and it was also unknown or false that Quinn was a baked potato. Then …

1. (P ∨ Q)

2. P (1, ∨E) is invalid.
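A quick Python sketch (the `counterexamples` helper is our own) makes the contrast visible: adding a disjunct never produces a counterexample, while dropping one does, namely the row where P is false and Q is true.

```python
from itertools import product

def counterexamples(premises, conclusion):
    """Rows (P, Q) where every premise is true but the conclusion is false."""
    return [
        (p, q)
        for p, q in product([True, False], repeat=2)
        if all(premise(p, q) for premise in premises) and not conclusion(p, q)
    ]

# ∨I: from P infer P ∨ Q. No counterexamples, so the inference is valid.
print(counterexamples([lambda p, q: p], lambda p, q: p or q))  # []
# Dropping a disjunct: from P ∨ Q infer P. Invalid.
print(counterexamples([lambda p, q: p or q], lambda p, q: p))  # [(False, True)]
```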

Complex statements can be checked for validity by breaking them down into smaller parts, bracketing as appropriate, and often placing brackets within brackets and connectives as necessary. The procedure is to isolate the main connective first. Disjunction introduction, conjunction introduction and conjunction elimination can all be validated by working backwards from a main connective.

Mixing Conjunctions and Disjunctions

Moving brackets around works for conjunctions so long as they aren’t negated or mixed with disjunctions. Suppose we had said, “There is one Universe and there is no God or there are many Universes and there is a God.” Where should we put the parentheses? First, let’s look at the simple sentences and assign symbols.

Let

O = “There is one Universe”

G = “There is a God”

M = “There are many Universes”

As written, the sentence has no single main connective, so there is no place yet to apply a rule such as conjunction elimination. Without parentheses, the sentence above will look like this …

O ⋀ ~G ∨ M ⋀ G.

The problem with this form is that a methodical understanding of logic involves isolating one main connective at a time to determine a truth value. This is why parentheses are needed. Now if we were to add parentheses to match the intent of the prose assertion, where would we place them? Which of the following is correct?

1. O ⋀ (~G ∨ (M ⋀ G))

2. O ⋀ ((~G ∨ M) ⋀ G)

3. (O ⋀ ~G) ∨ (M ⋀ G)

4. ((O ⋀ ~G) ∨ M) ⋀ G

5. (O ⋀ (~G ∨ M)) ⋀ G
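Before settling the question, it can help to see which of the five options actually say different things. The Python sketch below (our own illustration) computes the truth-value column of each option over all eight rows for O, G and M and reports any pair whose columns match. On our reading, only options 2 and 5 coincide, since they differ only in how the conjunctions are grouped; options 1, 3 and 4 are each distinct.

```python
from itertools import product

# The five candidate bracketings of O ⋀ ~G ∨ M ⋀ G, as functions of (O, G, M).
options = {
    1: lambda o, g, m: o and ((not g) or (m and g)),
    2: lambda o, g, m: o and (((not g) or m) and g),
    3: lambda o, g, m: (o and (not g)) or (m and g),
    4: lambda o, g, m: ((o and (not g)) or m) and g,
    5: lambda o, g, m: (o and ((not g) or m)) and g,
}

ROWS = list(product([True, False], repeat=3))

def column(f):
    """The truth-value column of a formula over all eight rows."""
    return tuple(bool(f(*row)) for row in ROWS)

# Report which options share a column, i.e. are truth-functionally equivalent.
for i in options:
    for j in options:
        if i < j and column(options[i]) == column(options[j]):
            print(f"option {i} ≡ option {j}")  # prints: option 2 ≡ option 5
```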

Avoiding Ambiguity

One of the options might work, but the sentence above is ambiguous, making it difficult to tell which expression applies, and not all of the expressions are equivalent. (Options 2 and 5 differ only in how the conjunctions are grouped, so they are equivalent to each other, but they differ from the rest.) To be absolutely clear, we should always emphasize punctuation and language that separates ideas. A semicolon is stronger than a comma in separating ideas and might indicate where parentheses should go, but context can also help, and we might use strong pivot words, like “even so” or “notwithstanding.” What if we had said, “Perhaps it is possible that there is one Universe and there is no God, but there is reason to believe that even though it is not true that there is one Universe and that there is no God, as many suppose, it is actually true that there are many Universes and there is a God”? The context of the sentence, expressed this way, shows that the disjunction sits between two conjunctive options, as in interpretation 3. We might also ask ourselves why someone would say something, so as to interpret it in the most charitably rational way. Obviously, from a logical standpoint, if there is only one Universe, there are not many Universes, and if there is no God, there is not also a God. These would be logical contradictions.

This example presents a challenge for Pamalogy. If there is a Multiverse, then even if it were true that one Universe might have a God, it might also be true that another did not. Possibly this would depend on what qualities and expectations might exist in one’s definition of God. Fortunately, Pamalogy is very clear about the definition of God as “that than which there could be nothing conceivably greater – Maximized Awesomeness.” Every conceivable Universe would be subject to the dominion of such a God. Therefore, either God exists in one Universe or God exists in many.

Charity also avoids straw men. To presume there was a non sequitur would be uncharitable. The expression “reason to believe that” which I’ve added here shows that this is not a complete proposition. It would be a non sequitur if it was a complete proposition but it isn’t. Here, all the statement points to is that “or” statements don’t require both disjuncts to be true. We can see that only one side could be true or there would be a contradiction both as to the number of Universes and as to whether there is a God. As an “or” statement, for those who find reason to believe there is no God, yet believe in a Multiverse, and for those who believe that there is a God, yet believe in just one Universe, the burden of proof remains on us in giving the expression “there is reason to believe.” If you would like to know what those reasons are, then you should take our free Pamalogy 101 Course.

Using Neither Nor

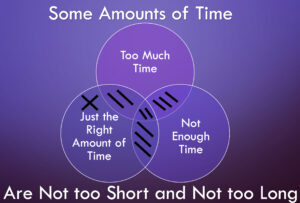

One clear way to indicate where parentheses should go is through neither/nor statements. Take the example, “Neither is there too much time nor is there too little time.” Apparently this indicates there is just the right amount of time, but let’s consider the form.

Let

M = “there is too much time”

L = “there is too little time”

M ∨ L = “There is too much time or there is too little time”

~(M ∨ L) = “Neither is there too much time nor is there too little time.”

This gives us our expression. So, what would make it false? If either M were true, that there was too much time, or L were true, that there was too little time, then it would be false. Now it might seem that the law of non-contradiction would apply to this statement, since too much and too little are opposites, but that is not the case. There is a situation where “just the right amount of time” might be true. In fact, this latter notion is presumably the precise point of making the statement. So what is actually meant is that there are multiple conditions that could each be true or false, pertaining to some criteria for the truth of the statement, and these are best expressed in Venn Diagrams like the ones shown above.

An X mark in a circle means there is something in that category. Shading means that there is nothing in that category. Looking at the Venn Diagrams above, we can see that it is logically possible that there could be neither too much nor too little time, even if the reference is to all time. The law of non-contradiction does not apply, even if sometimes there isn’t enough time and sometimes there is too much time, so long as sometimes there is just the right amount of time.

Logic is Not Math

Now, what if we treated the statement like an addition problem, negating everything inside the parentheses equally, the way a minus sign distributes over a sum? Is ~(M ∨ L) ≡ ~M ∨ ~L? In this case the latter statement would mean “there is not too much time or there is not too little time.”

Would this have the same meaning as “neither is there too much time nor is there too little time”? Well, no. An inclusive “or” statement is true if either side is true or if both sides are true. Only if both sides are false is the statement false, since then neither side is true. ~M ∨ ~L is therefore the wrong way to remove the parentheses, because it doesn’t mean neither M nor L. The right way is with an “and” statement, since both M and L are negated: ~M ^ ~L. In a Neither/Nor expression, both ~M and ~L must be true. So …
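The difference between the two ways of removing the parentheses can be checked row by row. In this Python sketch (function names are illustrative), `neither_nor` is ~(M ∨ L), `both_not` is ~M ^ ~L and `either_not` is the “addition problem” rewrite ~M ∨ ~L; the last line lists the rows where that rewrite gives a different answer.

```python
from itertools import product

# M = "there is too much time", L = "there is too little time"
def neither_nor(much: bool, little: bool) -> bool:
    return not (much or little)           # ~(M ∨ L)

def both_not(much: bool, little: bool) -> bool:
    return (not much) and (not little)    # ~M ^ ~L

def either_not(much: bool, little: bool) -> bool:
    return (not much) or (not little)     # ~M ∨ ~L, the "addition problem" rewrite

rows = list(product([True, False], repeat=2))
print(all(neither_nor(m, t) == both_not(m, t) for m, t in rows))  # True
print([(m, t) for m, t in rows if neither_nor(m, t) != either_not(m, t)])
# [(True, False), (False, True)]
```

The two mismatched rows are exactly the cases where one of M and L is true and the other is false.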

- ~M ≡ “There is not too much time”

- ~L ≡ “There is not too little time”

Here again then we run into ambiguity. In order for both ~M and ~L to be true, how does that look on a Venn Diagram? Well, it will look a lot like the second Venn Diagram above, only it doesn’t matter whether there is anything in the “just the right amount of time” category. In fact, there really doesn’t need to be a third category if there is no such thing as time.

Not Both vs Both Not – We run into the same problem of logic not being exactly like math when we negate a conjunction. Unlike math, we can’t just negate each conjunct and keep the “and” when we remove the parentheses; the connective changes too. So let’s say there is one donut left in the freezer and there are three people in the house who might eat it: Olivia, Paul and Quinn. Referring to that donut, I can confidently say, “Paul and Quinn won’t both eat that whole donut.” After all, if they split the donut, then neither of them will eat the whole donut, and if Olivia eats the donut, neither of them will eat the whole donut, so the only way either one of them can eat the whole donut is if the other doesn’t have any donut at all, and neither does Olivia. But we’ll focus on the statement, “Paul and Quinn won’t both eat that whole donut.” We’ll refer to this as a “not both” statement. It is not to be confused with “both not.” “Both not” is equivalent to “neither nor,” as in the previous section.

Let

P = “Paul will eat that whole donut”

Q = “Quinn will eat that whole donut”

S = Statement

~(P ^ Q)

1. S = ~(P ^ Q)

2. S ≠ ~P ^ ~Q (from 1)

3. S = ~P ∨ ~Q (from 1)

Why is 2 invalid but 3 valid? Because if it is true that Paul and Quinn won’t both eat that whole donut, as expressed in 1, the possibility still exists that either Paul or Quinn could eat it. That is a very different thing than saying that Paul won’t eat that whole donut and Quinn won’t either (“both not,” AKA “neither nor”), because if that were true, then neither one of them could eat that donut. This is not a neither/nor expression. It is a “not both” expression rather than a “both not.” Line 3 provides the equivalent instead. Either “not P” being true or “not Q” being true (or both, since this is an inclusive or) will amount to the same thing as the “not both” that we have in line 1. S means not (P and Q). That’s not the same thing as neither P nor Q.
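Here is the donut example as a small Python sketch, with P and Q defined as above and function names of our own choosing. It prints the “not both” statement from line 1 next to the candidates from lines 3 and 2, so you can see that ~P ∨ ~Q matches ~(P ^ Q) on every row while ~P ^ ~Q does not.

```python
from itertools import product

def not_both(p: bool, q: bool) -> bool:
    """~(P ^ Q): Paul and Quinn won't both eat that whole donut (line 1)."""
    return not (p and q)

def either_not(p: bool, q: bool) -> bool:
    """~P ∨ ~Q (line 3)."""
    return (not p) or (not q)

def both_not(p: bool, q: bool) -> bool:
    """~P ^ ~Q, "neither nor" (line 2)."""
    return (not p) and (not q)

def tv(value: bool) -> str:
    return "T" if value else "F"

print("P Q | ~(P ^ Q) | ~P ∨ ~Q | ~P ^ ~Q")
for p, q in product([True, False], repeat=2):
    print(tv(p), tv(q), "|", tv(not_both(p, q)), "|", tv(either_not(p, q)), "|", tv(both_not(p, q)))
```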

DeMorgan’s Law (DEM) – deals with the functional equivalency ( ≡ ) between the two expressions of neither nor and the two expressions of not both.

1. ~P ^ ~Q (“neither nor,” AKA “both not”)

2. ~(P ∨ Q) (1, DEM)

3. ~P ^ ~Q (2, DEM)

and

1. ~P ∨ ~Q (“not both”)

2. ~(P ^ Q) (1, DEM)

3. ~P ∨ ~Q (2, DEM)

Make sure you don’t confuse “both not” with “not both.”

Checking Disjunctions with Truth Tables

To check whether Neither/Nor statements are the same as or different from “both not” statements, and how both differ from “not both” statements, we can compare their respective Truth Tables. Build column by column, left to right, from the simplest parts, then include each result as part of the more complex parts of the statement until the main operator is reached for the compound statements being compared, as follows …

| P | Q | ~P | ~Q | P ^ Q | P ∨ Q | ~(P ∨ Q) | ~P ^ ~Q | ~(P ^ Q) |
|---|---|---|---|---|---|---|---|---|
| T | T | F | F | T | T | F | F | F |
| T | F | F | T | F | T | F | F | T |
| F | T | T | F | F | T | F | F | T |
| F | F | T | T | F | F | T | T | T |
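The column-by-column procedure can also be automated. This Python sketch (our own; the `markdown_table` helper is hypothetical) lists each column as a header and a function of P and Q, in the same simple-to-complex order as the table above, and prints the result in the same table layout.

```python
from itertools import product

# Each column: (header, function of the row values p and q), simplest parts first.
columns = [
    ("P", lambda p, q: p),
    ("Q", lambda p, q: q),
    ("~P", lambda p, q: not p),
    ("~Q", lambda p, q: not q),
    ("P ^ Q", lambda p, q: p and q),
    ("P ∨ Q", lambda p, q: p or q),
    ("~(P ∨ Q)", lambda p, q: not (p or q)),
    ("~P ^ ~Q", lambda p, q: (not p) and (not q)),
    ("~(P ^ Q)", lambda p, q: not (p and q)),
]

def tv(value: bool) -> str:
    return "T" if value else "F"

def markdown_table(columns) -> str:
    """Return the truth table as pipe-table text, one row per assignment of P and Q."""
    lines = ["| " + " | ".join(header for header, _ in columns) + " |",
             "|" + "---|" * len(columns)]
    for p, q in product([True, False], repeat=2):
        lines.append("| " + " | ".join(tv(f(p, q)) for _, f in columns) + " |")
    return "\n".join(lines)

print(markdown_table(columns))
```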

Functional Equivalence and how to check for it.

The fact that “neither/nor” (usually made clear as ~(P ∨ Q)) is the same as “both not,” as shown in the table above, is called “functional equivalence” and is indicated by the triple bar ( ≡ ). Whenever there is functional equivalence between statements of any kind, the truth values in a Truth Table will match on every row in the columns for the two statements being compared. Note that some functional equivalences involve nested conjunctions and disjunctions and can serve as rules in their own right. For instance:

A & (B ∨ C) ≡ (A & B) ∨ (A & C)

| A | B | C | B ∨ C | A ^ (B ∨ C) | A ^ B | A ^ C | (A & B) ∨ (A & C) |
|---|---|---|---|---|---|---|---|
| T | T | T | T | T | T | T | T |
| T | T | F | T | T | T | F | T |
| T | F | T | T | T | F | T | T |
| T | F | F | F | F | F | F | F |
| F | T | T | T | F | F | F | F |
| F | T | F | T | F | F | F | F |
| F | F | T | T | F | F | F | F |
| F | F | F | F | F | F | F | F |

Functional Equivalence also exists for A ∨ (B & C) ≡ (A ∨ B) & (A ∨ C)

| A | B | C | B & C | A ∨ (B & C) | A ∨ B | A ∨ C | (A ∨ B) & (A ∨ C) |
|---|---|---|---|---|---|---|---|
| T | T | T | T | T | T | T | T |
| T | T | F | F | T | T | T | T |
| T | F | T | F | T | T | T | T |
| T | F | F | F | T | T | T | T |
| F | T | T | T | T | T | T | T |
| F | T | F | F | F | T | F | F |
| F | F | T | F | F | F | T | F |
| F | F | F | F | F | F | F | F |
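Both distributive equivalences can be confirmed by comparing columns over all eight rows for A, B and C. The `equivalent` helper in this Python sketch is our own illustration.

```python
from itertools import product

def equivalent(f, g) -> bool:
    """Same truth value on all eight rows for A, B and C."""
    return all(f(a, b, c) == g(a, b, c) for a, b, c in product([True, False], repeat=3))

# A ^ (B ∨ C) ≡ (A ^ B) ∨ (A ^ C)
print(equivalent(lambda a, b, c: a and (b or c),
                 lambda a, b, c: (a and b) or (a and c)))  # True
# A ∨ (B ^ C) ≡ (A ∨ B) ^ (A ∨ C)
print(equivalent(lambda a, b, c: a or (b and c),
                 lambda a, b, c: (a or b) and (a or c)))   # True
```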

Disjunctive and Conjunctive Distribution

Functional equivalence leads to distribution: if a statement is a premise, it can be referred to and its functional equivalent may be distributed. (Always cite the premise’s line number and the DIST label, separated by a comma.) For example, in the first example above:

1. A ^ (B ∨ C)

2. (A ^ B) ∨ (A ^ C) (1, DIST)

Distribution works in either direction. So the same functional equivalence also allows this:

1. (A ^ B) ∨ (A ^ C)

2. A ^ (B ∨ C) (1, DIST)

The above are valid arguments using conjunctive distribution. The arguments below use disjunctive distribution …

1. A ∨ (B ^ C)

2. (A ∨ B) ^ (A ∨ C) (1, DIST)

and …

1. (A ∨ B) ^ (A ∨ C)

2. A ∨ (B ^ C) (1, DIST)

But watch out when mixing these together. The following arguments apply distribution incorrectly, one to a conjunctive premise and one to a disjunctive premise …

Conjunctive premise – Invalid Disjunctive Distribution…

1. (A ∨ B) ^ (A ∨ C)

2. A ^ (B ∨ C) (1, DIST)

3. (A ∨ B) ^ (A ∨ C) (2, DIST)

Disjunctive premise – Invalid Conjunctive Distribution…

1. A ∨ (B ^ C)

2. (A ∨ B) ∨ (A ∨ C) (1, DIST)

3. A ∨ (B ^ C) (2, DIST)
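Because distribution rests on equivalence, one way to see that the rewritten lines above are not licensed by DIST is to list the rows on which a premise and its supposed distributed form disagree. In this Python sketch (the `differing_rows` helper is hypothetical), an empty list would mean the two forms are equivalent.

```python
from itertools import product

def differing_rows(f, g):
    """Rows (A, B, C) on which two formulas disagree; empty means they are equivalent."""
    return [row for row in product([True, False], repeat=3) if f(*row) != g(*row)]

# (A ∨ B) ^ (A ∨ C) versus A ^ (B ∨ C)
print(differing_rows(lambda a, b, c: (a or b) and (a or c),
                     lambda a, b, c: a and (b or c)))
# [(True, False, False), (False, True, True)]

# A ∨ (B ^ C) versus (A ∨ B) ∨ (A ∨ C)
print(differing_rows(lambda a, b, c: a or (b and c),
                     lambda a, b, c: (a or b) or (a or c)))
# [(False, True, False), (False, False, True)]
```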

Process of Elimination

The disjunctive syllogism (DS) is a practical tool for logic, more commonly called process of elimination. Let’s say there was a wedding and either Paul or Quinn got married, and you find out it wasn’t Quinn. The argument will look like this…

1. P ∨ Q

2. ~Q

3. P (1, 2, DS)

If it had not been Paul, then

1. P ∨ Q

2. ~P

3. Q (1, 2, DS)
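Disjunctive syllogism can be checked the same way as the other rules. This Python sketch (with a hypothetical `is_valid` helper limited to the two statements P and Q) confirms that both versions above have no row where the premises are true and the conclusion false.

```python
from itertools import product

def is_valid(premises, conclusion) -> bool:
    """No row for (P, Q) makes every premise true and the conclusion false."""
    return all(
        conclusion(p, q)
        for p, q in product([True, False], repeat=2)
        if all(premise(p, q) for premise in premises)
    )

# P ∨ Q, ~Q, therefore P
print(is_valid([lambda p, q: p or q, lambda p, q: not q], lambda p, q: p))  # True
# P ∨ Q, ~P, therefore Q
print(is_valid([lambda p, q: p or q, lambda p, q: not p], lambda p, q: q))  # True
```

Without the second premise, P ∨ Q alone does not establish P; the eliminated disjunct is what does the work.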

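The order of the first two premises doesn’t matter. Disjunctive syllogism also works for complex statements, but only when the disjunction is the main connective and the second premise negates one whole disjunct. The following is not a valid use of disjunctive syllogism, because ~R negates only part of the second disjunct, not the disjunct ~(Q ∨ R) itself. (The conclusion happens to follow from line 1 by other means, since ~(Q ∨ R) already implies ~Q, but DS is not what licenses it.)

1. P ∨ ~(Q ∨ R)

2. ~R

3. P ∨ ~Q (1, 2 DS)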

Don’t Affirm the Disjunct! – be careful of this fallacy. “Inclusive or” means either one or both may be true and the statement will be true. The following argument is invalid unless the “exclusive or” has been specified …

1. P ∨ Q

2. P

3. ~Q
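The following Python sketch (the `counterexamples` helper is our own) shows why the argument fails with an inclusive “or” and why it succeeds once an exclusive “or” is specified. Here the exclusive “or” ( ⩡ ) is modeled as `p != q`, which is true exactly when one side is true and the other is false.

```python
from itertools import product

def counterexamples(premises, conclusion):
    """Rows (P, Q) where every premise is true but the conclusion is false."""
    return [(p, q) for p, q in product([True, False], repeat=2)
            if all(premise(p, q) for premise in premises) and not conclusion(p, q)]

# Inclusive or: P ∨ Q, P, therefore ~Q (invalid)
print(counterexamples([lambda p, q: p or q, lambda p, q: p], lambda p, q: not q))
# [(True, True)]

# Exclusive or: P ⩡ Q, P, therefore ~Q (valid)
print(counterexamples([lambda p, q: p != q, lambda p, q: p], lambda p, q: not q))
# []
```

The single counterexample for the inclusive version is the row where both P and Q are true.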

Also watch out for the fallacy of a false dilemma here. Why should the first premise be true? Are there other options? Distinction without a difference is common with disjunctive syllogisms. They are valid but often unsound. The fallacy of affirming the disjunct, on the other hand is neither valid nor sound.

Nomenclature of Categorical Logic

So far, we’ve focused on propositional logic and its symbolic usage, covering the most common types of arguments. The words “and,” “or” and “not” take on different meanings in categorical logic. You can tell an argument is categorical, rather than propositional, when you see quantifiers like “some,” “all” or “none” used with “is” and “are.”

Subjects and Predicates versus Statements

In propositional logic we deal with sentences that are statements or propositions (P). Propositions can be designated with any symbol, so they can serve as variables in arguments. In categorical logic we break sentences down into a subject (S) and a predicate (P). It is common in abstract propositional logic to use the variables P, Q and R, starting with the letter P for “proposition.” To avoid confusion between “P” as predicate in categorical logic and “P” as proposition in propositional logic, we’ve mostly used A, B, C, etc. or other variables, rather than P, Q and R, for propositional logic. In categorical logic, the difference between subject and predicate is fundamental, because every categorical statement says something (the predicate) about a subject. Every statement has a subject and a predicate, and in categorical logic a two-circle Venn Diagram compares those two categories.

Use of Capital & Lower Case Letters for the Symbols of Categorical Logic

We will attempt to consistently use lower case letters for specific items belonging to categories and upper case letters for the categories themselves. Notice the difference from propositional logic, where capital letters stand for whole atomic statements, each of which may involve a subject and a predicate (P). In categorical logic, the subject is denoted with a lower case letter when it is a member (s) of a set (S), and with an upper case letter when it refers to the set itself. The predicate is also indicated in upper case. Thus …

“Jimmy cracked corn”

j = a specific member in the category of names that begin with J

J = the category of names that begin with the letter J

C = Cracked Corn

s = specific member in the subject category

S = subject that is the category

P = what is predicated of the subject. What did we say about s and/or S?

Existentials and Universals

An existential statement asserts that something exists (“some”). In logic, “some” means at least one. Existentials, also known as “particulars,” stand in contrast to universals (“all” and “none”). For Venn Diagrams with two circles, there are two types of particular statements: affirmative and negative.

Particular Negative: Some A are not B – These are called “O” sentences. This means there is at least one thing inside circle A but outside its intersection with B.

Particular Affirmative: Some A are B – These are called “I” sentences. Particular affirmative statements mean there is something inside the intersection between two category circles in a Venn Diagram.

There are also two types of Universal statements – negative and affirmative…

Universal Negative: No A are B – These are called “E” sentences. The intersection of A and B is empty (shaded), so all of A is excluded from B.

Universal Affirmative: All A are B – These are called “A” sentences. All of category A is included in B, so the part of A outside B is empty.

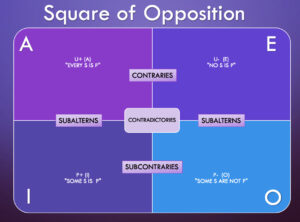

Most people learn these in the order of the vowels AEIO and place them in a square. The AEIO designations are useful for nomenclature when making logical arguments, because they reduce each type of statement to a single symbol. The designations are historical, and their original meaning doesn’t matter here. We will keep the traditional AEIO designations, where …

U+ …. U-

P+ …. P-

with U = universal, P = particular, + = affirmative and - = negative, so U+ = A; U- = E; P+ = I; P- = O
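One way to make the four forms concrete is to read each category as a set. Each statement then becomes a condition on the regions of the two-circle Venn Diagram. This is a minimal Python sketch that assumes categories are finite sets. The function names reuse the AEIO letters, and the sample categories are made up.

```python
def A(S, P):
    """All S are P: no member of S lies outside P."""
    return S <= P

def E(S, P):
    """No S are P: the intersection of S and P is empty."""
    return not (S & P)

def I(S, P):
    """Some S are P: at least one member lies in the intersection."""
    return bool(S & P)

def O(S, P):
    """Some S are not P: at least one member of S lies outside P."""
    return bool(S - P)

# Made-up sample categories.
dogs = {"rex", "fido"}
pets = {"rex", "tom"}
print(A(dogs, pets), E(dogs, pets), I(dogs, pets), O(dogs, pets))
# False False True True
```

Under this set reading, A and E both come out true when S is empty. The traditional square of opposition is usually read as assuming that the subject category has at least one member (existential import), so it sets that case aside.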

Contradictions and Distributions

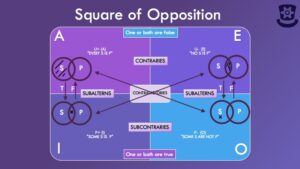

The AEIO logic square is also referred to as the Square of Opposition. It is useful for determining valid and invalid distributions, contrary statements and logical possibilities and contradictions.

Contradiction – There can’t be both something and nothing in the same region of a Venn Diagram, so there should never be an X in a shaded area. Among the opposites in the AEIO square, some pairs can never be true together (marked “invalid” below), while others can both be true (marked “valid”) …

- Contraries – “every A is a B” ^ “no A is a B” (Universal Affirmative vs Universal Negative) = invalid

- Contradictories – “no A is a B” ^ “some A is a B” (Universal Negative vs Particular Affirmative) = invalid; “every A is a B” ^ “some A are not B” (Universal Affirmative vs Particular Negative) = invalid

- SubContraries – “some A is a B” ^ “some A is not a B” (Particular Affirmative vs Particular Negative) = valid

- Affirmative SubAlterns – “every A is a B” ^ “some A is a B” (Universal Affirmative vs Particular Affirmative) = valid

- Negative SubAlterns – “no A is B” ^ “some A is not B” (Universal Negative vs Particular Negative) = valid

Contraries, Subcontraries and Subalterns – The square of opposition shows that A and E are contraries: it can’t simultaneously be true that all S are P and no S are P, although both can be false. There is a contradiction between A and O: it can’t be said both that all S are P and that some S are not P. Similarly, E and I are contradictory: it can’t be said both that no S is P and that some S is P. So A and E are universal contraries, while A and O, as well as E and I, are contradictories, meaning exactly one statement in each pair is true. The subalterns are the pairs A and I, and E and O.

Particulars are subcontraries. Of an I statement and an O statement, at least one will be true, and possibly both. For instance, it may be said that “some S is P” and also that “some S are not P.” Both statements could be true. All we know with certainty is that at least one of them is true, and we won’t know which without more information.

Notice also how subalternation holds from universals to particulars, from A to I and from E to O, but not from particulars to universals. In other words, if “all S are P,” then “some S are P,” but saying “some S are P” does not mean that “all S are P.” That might happen to be true, but it is not a proof, so the inference would be invalid. Similarly, if O is true, E is not necessarily true. That is, if “no S are P,” then “some S are not P,” but reversing the statement by saying “some S are not P” doesn’t establish that “no S is P.”

Such is the nature of subalterns: they work from universals to particulars (A to I, E to O), but not from particulars to universals (I to A, O to E).

I and O are subcontraries. They can both be true, but knowing one of them does not give enough information to say so. If “some S is P,” then it may or may not also be true that “some S are not P.” Similarly, if “some S are not P,” then it may or may not also be true that “some S are P.” One statement may be true, or the other, or both, but there is not enough information for certainty about both. All we have between I and O is a disjunction (I ∨ O). This disjunctive relationship between I and O is summed up with the term “subcontraries.”
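The relationships in the square can be sanity-checked by brute force over every pair of subsets of a small universe. This is a finite check, not a proof. Like the traditional square, it assumes that the subject category S is never empty. The helper names and the three-element universe are illustrative choices.

```python
from itertools import combinations

def subsets(universe):
    """Every subset of a small universe, as Python sets."""
    items = sorted(universe)
    return [set(c) for r in range(len(items) + 1) for c in combinations(items, r)]

def A(S, P): return S <= P          # All S are P
def E(S, P): return not (S & P)     # No S are P
def I(S, P): return bool(S & P)     # Some S are P
def O(S, P): return bool(S - P)     # Some S are not P

U = {1, 2, 3}
# Assumption (existential import): S is never empty.
pairs = [(S, P) for S in subsets(U) if S for P in subsets(U)]

contradictories = all(A(S, P) != O(S, P) and E(S, P) != I(S, P) for S, P in pairs)
contraries = all(not (A(S, P) and E(S, P)) for S, P in pairs)
subcontraries = all(I(S, P) or O(S, P) for S, P in pairs)
# Subalternation: A implies I, and E implies O.
subalterns = all((I(S, P) or not A(S, P)) and (O(S, P) or not E(S, P)) for S, P in pairs)
# Does I ever guarantee A?  (particular to universal)
i_to_a = all(A(S, P) for S, P in pairs if I(S, P))
print(contradictories, contraries, subcontraries, subalterns, i_to_a)
# True True True True False
```

The last value is False because some pairs make I true while A is false, for example S = {1, 2} and P = {1}. This is why subalternation does not run from particulars to universals.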

Quantifier Negation Laws (QN) – Closely related to the laws of opposition in the square, there are also laws of negation that involve equivalences. Consider the following logical equivalents:

No S are P ≡ All S are non-P

Not All S are P ≡ Some S are not P

We can use these equivalences to make inferences in logical statements.

- No S are P

- All S are non-P (1, QN)

- No S are P (2, QN)

- Not All S are P

- Some S are not P (1, QN)

- Not All S are P (2, QN)
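Both QN equivalences can be checked the same way if non-P is treated as everything in the universe of discourse that is not in P. Here is a minimal finite check in Python. The universe U and the helper `subsets` are illustrative assumptions.

```python
from itertools import combinations

def subsets(universe):
    """Every subset of a small universe, as Python sets."""
    items = sorted(universe)
    return [set(c) for r in range(len(items) + 1) for c in combinations(items, r)]

U = {1, 2, 3}  # illustrative universe; non-P is taken to be U - P
pairs = [(S, P) for S in subsets(U) for P in subsets(U)]

# No S are P  ≡  All S are non-P
qn_no = all((not (S & P)) == (S <= U - P) for S, P in pairs)
# Not all S are P  ≡  Some S are not P
qn_not_all = all((not (S <= P)) == bool(S - P) for S, P in pairs)
print(qn_no, qn_not_all)  # True True
```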

It’s also useful to know that De Morgan’s Laws apply to series of conjunctions and disjunctions when considering sets.

~(S1 is P ∨ S2 is P ∨ …) ≡ ~(S1 is P) ^ ~(S2 is P) ^ …

~(S1 is P ^ S2 is P ^ …) ≡ ~(S1 is P) ∨ ~(S2 is P) ∨ …
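The series form of De Morgan’s Laws corresponds to Python’s built-in `any` and `all`. “S1 is P ∨ S2 is P ∨ …” is `any(...)`, and “S1 is P ^ S2 is P ^ …” is `all(...)`. The sketch below checks both laws for every series of three truth values. The length of three is an arbitrary illustrative choice.

```python
from itertools import product

# Each row is a series of truth values for "S1 is P", "S2 is P", "S3 is P".
rows = list(product([True, False], repeat=3))

# ~(S1 is P ∨ S2 is P ∨ …)  ≡  ~(S1 is P) ^ ~(S2 is P) ^ …
law_or = all((not any(row)) == all(not x for x in row) for row in rows)
# ~(S1 is P ^ S2 is P ^ …)  ≡  ~(S1 is P) ∨ ~(S2 is P) ∨ …
law_and = all((not all(row)) == any(not x for x in row) for row in rows)
print(law_or, law_and)  # True True
```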

Applied Universal Instantiation (AUI) – If all members of a subject category (S) are members of a predicate category (P), then any instance (x) that is a member of subject category (S) is also an instance of the Predicate category (P).

- All S are P

- x is S

- x is P (1, 2 AUI)

Existential Generalization (EG) – If there is an instance (x) that is a member of a subject category (S), then something is S. Furthermore, if that same instance is also a member of the predicate category (P), then some S are P. So, for the instance x …

- x is S

- Something is S (1, EG)

- x is S

- x is P

- Some S are P (1, 2 EG)
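AUI and EG can be read as facts about set membership. This sketch checks them over every choice of S, P and x drawn from a small illustrative universe. It is a finite sanity check rather than a proof.

```python
from itertools import combinations

def subsets(universe):
    """Every subset of a small universe, as Python sets."""
    items = sorted(universe)
    return [set(c) for r in range(len(items) + 1) for c in combinations(items, r)]

U = {1, 2, 3}  # illustrative universe of discourse
cases = [(S, P, x) for S in subsets(U) for P in subsets(U) for x in U]

# AUI: All S are P, x is S, therefore x is P
aui_ok = all(x in P for S, P, x in cases if S <= P and x in S)
# EG: x is S, x is P, therefore some S are P (S ∩ P is non-empty)
eg_ok = all(bool(S & P) for S, P, x in cases if x in S and x in P)
print(aui_ok, eg_ok)  # True True
```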

Conversions

Switching subject and predicate is valid for E and I statements, i.e., Universal Negatives (E) and Particular Affirmatives (I).

Switching subject and predicate is invalid for A and O statements, i.e., Universal Affirmatives (A) and Particular Negatives (O).

So …

Universal Negatives (E) – Conversion is VALID

1. No S are P

2. No P are S (1, CONV)

Particular Affirmatives (I) – Conversion is VALID

1. Some S are P

2. Some P are S (1, CONV)

Universal Affirmatives (A) – Conversion is INVALID

1. All S are P

2. All P are S (Not Valid)

Particular Negatives (O) – Conversion is INVALID

1. Some S are not P.

2. Some P are not S (Not Valid)
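The conversion results can be confirmed by searching for a counterexample among all pairs of subsets of a small universe. This is a minimal Python sketch that models A, E, I and O as set conditions in a `FORMS` dictionary (a hypothetical name). Finding no counterexample in a finite universe is evidence, not a proof.

```python
from itertools import combinations

def subsets(universe):
    """Every subset of a small universe, as Python sets."""
    items = sorted(universe)
    return [set(c) for r in range(len(items) + 1) for c in combinations(items, r)]

# The four categorical forms as conditions on sets S and P.
FORMS = {
    "A": lambda S, P: S <= P,        # All S are P
    "E": lambda S, P: not (S & P),   # No S are P
    "I": lambda S, P: bool(S & P),   # Some S are P
    "O": lambda S, P: bool(S - P),   # Some S are not P
}

U = {1, 2, 3}  # illustrative universe of discourse
pairs = [(S, P) for S in subsets(U) for P in subsets(U)]

# Conversion is valid for a form if form(S, P) always guarantees form(P, S).
conversion = {name: all(f(P, S) for S, P in pairs if f(S, P))
              for name, f in FORMS.items()}
print(conversion)  # {'A': False, 'E': True, 'I': True, 'O': False}
```

For A, the pair S = {1}, P = {1, 2} is a counterexample: all S are P, but not all P are S.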

Obversions

Switching a statement from affirmative to negative, or from negative to affirmative, while also adding or removing a negation on the predicate, is valid for A, E, I and O as follows …

Universal Affirmatives (A) – Obversion is VALID

1. All S are P

2. No S are non-P (1, OBV)

Universal Negatives (E) – Obversion is VALID

1. No S are P

2. All S are non-P (1, OBV)

Particular Affirmatives (I) – Obversion is VALID

1. Some S are P

2. Some S are not non-P (1, OBV)

Particular Negatives (O) – Obversion is VALID

1. Some S are not P

2. Some S are non-P (1, OBV)
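Obversion can be checked the same way by taking non-P to be the complement of P within a fixed universe of discourse U. In this sketch, `OBVERSE` is a hypothetical mapping that follows the four examples above. It maps each form to the form that its obverse uses.

```python
from itertools import combinations

def subsets(universe):
    """Every subset of a small universe, as Python sets."""
    items = sorted(universe)
    return [set(c) for r in range(len(items) + 1) for c in combinations(items, r)]

FORMS = {
    "A": lambda S, P: S <= P,        # All S are P
    "E": lambda S, P: not (S & P),   # No S are P
    "I": lambda S, P: bool(S & P),   # Some S are P
    "O": lambda S, P: bool(S - P),   # Some S are not P
}
# Obversion flips the quality of the statement and complements the predicate.
OBVERSE = {"A": "E", "E": "A", "I": "O", "O": "I"}

U = {1, 2, 3}  # illustrative universe; non-P is U - P
pairs = [(S, P) for S in subsets(U) for P in subsets(U)]

# Each form should agree with its obverse on every pair (S, P).
obversion = {name: all(f(S, P) == FORMS[OBVERSE[name]](S, U - P) for S, P in pairs)
             for name, f in FORMS.items()}
print(obversion)  # {'A': True, 'E': True, 'I': True, 'O': True}
```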

Contrapositions

Switching the order of subject and predicate in a statement while also replacing each term with its complement (adding or removing “non-” on both the subject and the predicate) is called the “contraposition of a categorical.” It is valid for A and O statements but invalid for E and I statements as follows …

Universal Affirmatives (A) – Contraposition of Categorical is VALID

1. All S are P

2. All non-P are non-S (1, CTRA-CG)

Universal Negatives (E) – Contraposition of Categorical is INVALID

1. No S are P

2. No non-P are non-S (Not Valid) [non sequitur; what does follow, by conversion and obversion, is “All P are non-S”]

Particular Affirmatives (I) – Contraposition of Categorical is INVALID

1. Some S are P

2. Some non-P are non-S (Not Valid) [non sequitur]

Particular Negatives (O) – Contraposition of Categorical is VALID

1. Some S are not P

2. Some non-P are not non-S (1, CTRA-CG)
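Contraposition replaces the subject with non-P and the predicate with non-S. Using the same illustrative set model, with complements taken relative to U, a brute-force check reproduces the valid and invalid cases above.

```python
from itertools import combinations

def subsets(universe):
    """Every subset of a small universe, as Python sets."""
    items = sorted(universe)
    return [set(c) for r in range(len(items) + 1) for c in combinations(items, r)]

FORMS = {
    "A": lambda S, P: S <= P,        # All S are P
    "E": lambda S, P: not (S & P),   # No S are P
    "I": lambda S, P: bool(S & P),   # Some S are P
    "O": lambda S, P: bool(S - P),   # Some S are not P
}

U = {1, 2, 3}  # illustrative universe; non-X is U - X
pairs = [(S, P) for S in subsets(U) for P in subsets(U)]

# Valid if form(S, P) always guarantees form(non-P, non-S).
contraposition = {name: all(f(U - P, U - S) for S, P in pairs if f(S, P))
                  for name, f in FORMS.items()}
print(contraposition)  # {'A': True, 'E': False, 'I': False, 'O': True}
```

For E, the pair S = {1}, P = {2} is a counterexample: no S are P, yet 3 belongs to both non-P and non-S.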

Nomenclature of Identity

The Law of Identity – It is obvious that everything is identical to itself. Using a lower case letter for a particular thing, we write x = x; introducing this self-identity in a proof is labeled (=I). For example, if Bob is tall, then b is T. This law starts to matter when considering the Indiscernibility of Identicals (=E), and the symmetry and transitivity that stem from it. That is, if something has one name, x, and another name, y, then anything that can be said about x can be said about y. So we can eliminate (=E) the double identity and treat it as one, which allows us to apply any property of x to y. So …

- x is P

- x = y

- y is P (1, 2 =E)

Leibniz’ Law – Pamalogy involves metaphysics. There is a controversial reverse side of the indiscernibility of identicals, which Leibniz referred to as the “identity of indiscernibles.” This means that if something has all the same properties as something else, then the two are identical: it is not something else after all.

Symmetry – Because of the law of identity, if x = y, then y = x.

- x = y

- x = x (=I)

- y = x (1, 2 =E)

Transitivity – Transitivity adds yet another name for the same identity.

- x = y

- y = z

- x = z (1, 2 =E)

Categorical Syllogisms (CS)

An argument with three categorical propositions (two premises and a conclusion) that has three categorical terms, each of which is used twice, is called a categorical syllogism. Categorical syllogisms are a specific and very common type of argument. Conventionally, we put them into standard form.

Standard Form – The standard form for categorical syllogisms follows the natural pattern for pulling formal statements out of prose. First we identify the conclusion. The conclusion will have a subject (S) and a predicate (P). The first premise will say something about P using a middle term (M), something like, “Some M are P.” The second premise will say something about the subject, maybe something like, “All S are M.” And then the conclusion will use inferences from M to say something like, “Some S are P.” These sample premises only illustrate the layout. This particular combination is not itself valid, because the middle term is never distributed. You can see from this standard format that a standard-form syllogism has three categorical terms.

The three categorical terms are the major term, the minor term and the middle term. The minor term is the subject of the conclusion, and the major term is its predicate. The middle term appears in both premises, adding information that links the minor and major terms. These are usually designated as S (subject), P (predicate) and M (middle term). According to the logicians Copi and Cohen, authors of the textbook Introduction to Logic, a categorical syllogism must meet six requirements in order to be valid:

- All categorical syllogisms in standard form must have exactly three unambiguous categorical terms.

- The middle term must be distributed in at least one premise.